The performance imperative: Why edge AI is becoming mission-critical

When milliseconds can mean the difference between success and failure, where you run your AI becomes just as critical as what it does. The recent TechStrong Research report examining edge AI adoption reveals something I’ve observed firsthand across defense, manufacturing, and critical infrastructure sectors: we’re witnessing a fundamental shift in how organizations evaluate AI deployment strategies. Performance—not cost—is now the primary driver for mission-critical applications.

Beyond convenience: The strategic imperative of edge AI

The cloud has been the default choice for AI deployment for years—convenient, scalable, and seemingly cost-effective. The research confirms this pattern, with cloud AI implementations outpacing edge deployments by a 3-to-1 margin.

That dominance is eroding fast. One truth stands out from my work with defense, manufacturing, and infrastructure leaders: when split-second decisions define success, cloud AI’s latency and reliability gaps become dealbreakers.

Consider an autonomous military drone navigating hostile territory, a manufacturing quality system catching a costly defect, or a medical device monitoring vital signs. In these scenarios, the round-trip time to the cloud isn’t just an inconvenience; it’s a fundamental liability that can compromise the entire operation. A drone that waits on a cloud server can be destroyed, and seconds lost on a production line can cost millions.
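To make the round-trip argument concrete, here is a minimal latency-budget sketch. Every number in it is an illustrative assumption, not a figure from the TechStrong report, and the `LatencyBudget` class is a hypothetical helper. It only shows the arithmetic: a faster cloud model can still miss a deadline once the network round trip is added.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Hypothetical helper: does a deployment respond within its deadline?"""
    deadline_ms: float     # time available to act on an input
    inference_ms: float    # time the model takes to produce a result
    network_rtt_ms: float  # round trip to where the model runs (0 on-device)

    def total_ms(self) -> float:
        # End-to-end response time is inference plus any network round trip.
        return self.inference_ms + self.network_rtt_ms

    def meets_deadline(self) -> bool:
        return self.total_ms() <= self.deadline_ms


# Assumed values for illustration only: the cloud model is faster at
# inference, but the network round trip pushes it past the deadline.
cloud = LatencyBudget(deadline_ms=50.0, inference_ms=10.0, network_rtt_ms=80.0)
edge = LatencyBudget(deadline_ms=50.0, inference_ms=30.0, network_rtt_ms=0.0)

for name, budget in (("cloud", cloud), ("edge", edge)):
    print(name, budget.total_ms(), budget.meets_deadline())
```

Under these assumed numbers the edge deployment is the slower model yet the only one that fits the budget, which is the point of the scenarios above.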

This isn’t merely a technical preference. It’s a strategic imperative that’s reshaping how forward-thinking organizations approach AI architecture.

The new decision calculus: Performance over cost

What struck me most about the TechStrong findings is how fundamentally the decision calculus has shifted for AI deployments. For 20+ years, cost ruled infrastructure choices—performance was a bonus. Now, that’s flipped: performance trumps cost as AI becomes the heartbeat of real-time operations. This isn’t just a trend—it’s the new benchmark for survival in a high-stakes world.

Why this dramatic shift? As AI transitions from experimental technology to mission-critical applications, organizations are recognizing that the true cost of performance limitations far outweighs the incremental investment in edge capabilities. When a manufacturing line shutdown costs thousands per minute or when battlefield intelligence can save lives, the value equation fundamentally changes.
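The value equation can be sketched as a simple payback calculation. The function names and all input values below are hypothetical assumptions chosen for illustration; the report does not supply them. The sketch only shows how a per-minute downtime cost translates into an annual figure that can be weighed against an edge investment.

```python
def latency_cost_per_year(incidents_per_year: float,
                          extra_minutes_per_incident: float,
                          cost_per_minute: float) -> float:
    """Annual cost of downtime attributable to slow or unavailable inference."""
    return incidents_per_year * extra_minutes_per_incident * cost_per_minute


def payback_years(edge_investment: float, avoided_cost_per_year: float) -> float:
    """Years until the avoided downtime cost covers the edge investment."""
    if avoided_cost_per_year <= 0:
        return float("inf")  # nothing is saved, so the investment never pays back
    return edge_investment / avoided_cost_per_year


# Assumed inputs: 12 incidents a year, 5 extra minutes of line stoppage each,
# at 5,000 per minute, against a 150,000 edge investment.
avoided = latency_cost_per_year(12, 5, 5_000)
print(avoided, payback_years(150_000, avoided))
```

With these assumed inputs the avoided cost is 300,000 per year and the investment pays back in half a year. Real figures will vary widely by operation.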

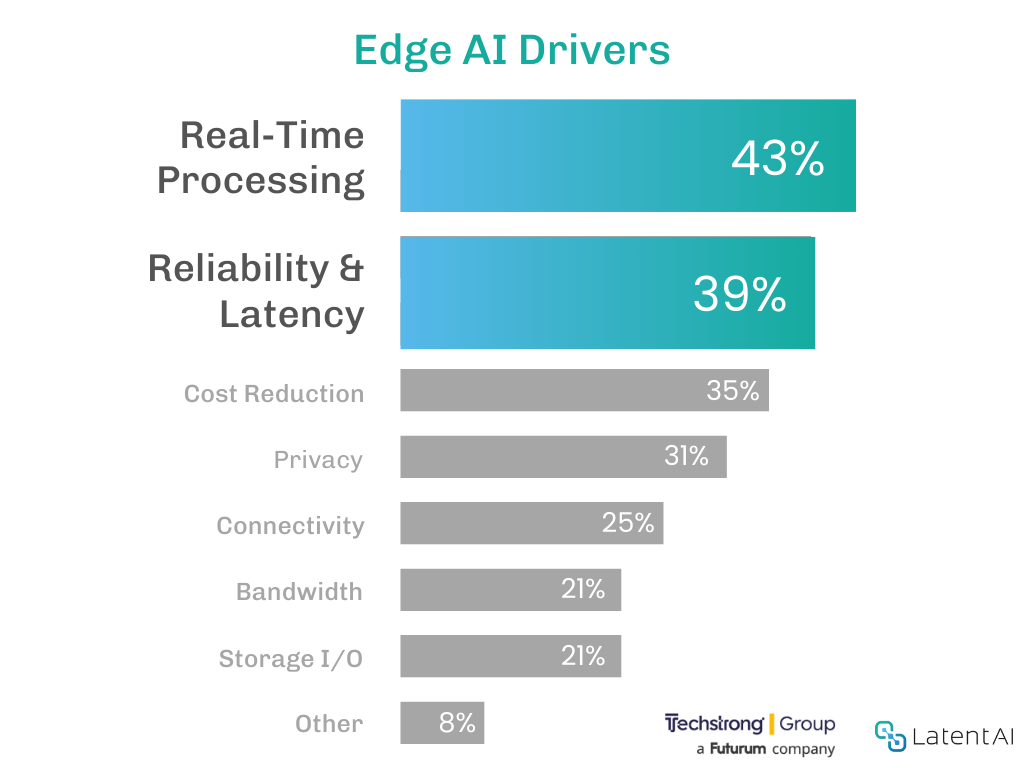

This represents a maturation of the AI market—a recognition that the business value of AI comes not just from its analytical capabilities, but from its ability to deliver insights precisely when and where they’re needed most. For applications requiring real-time processing (cited by 43% of respondents), reliability (39%), and minimal latency (39%), edge computing isn’t merely an option—it’s becoming the only viable approach.

The expertise bottleneck: Why edge AI adoption lags despite clear benefits

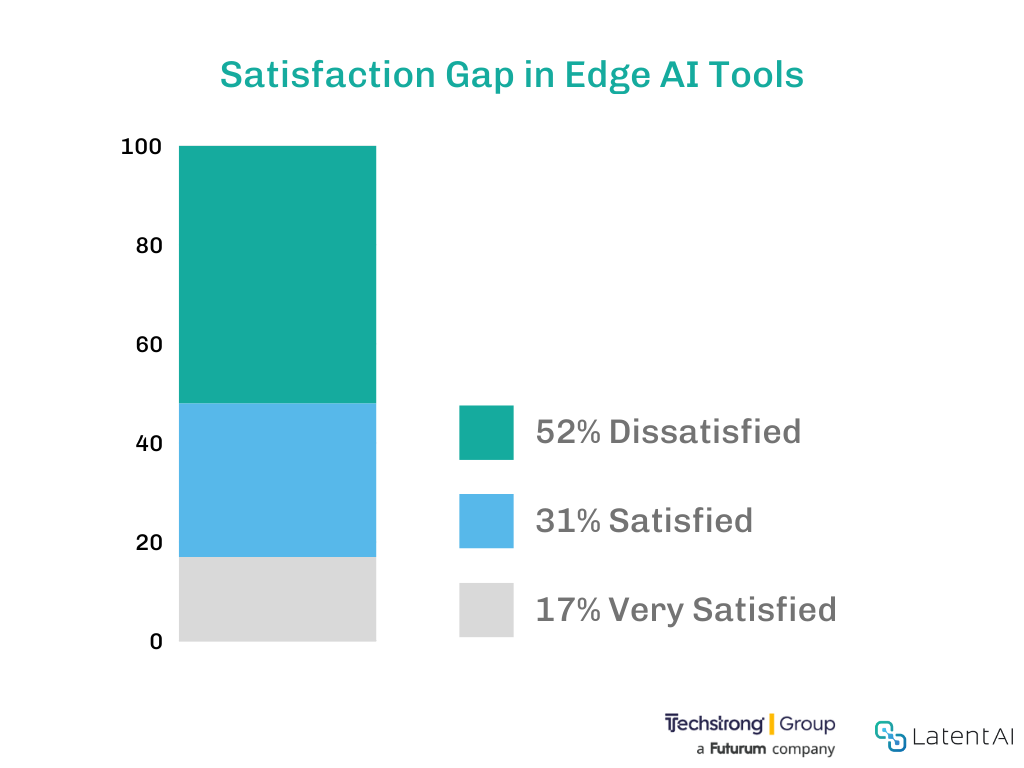

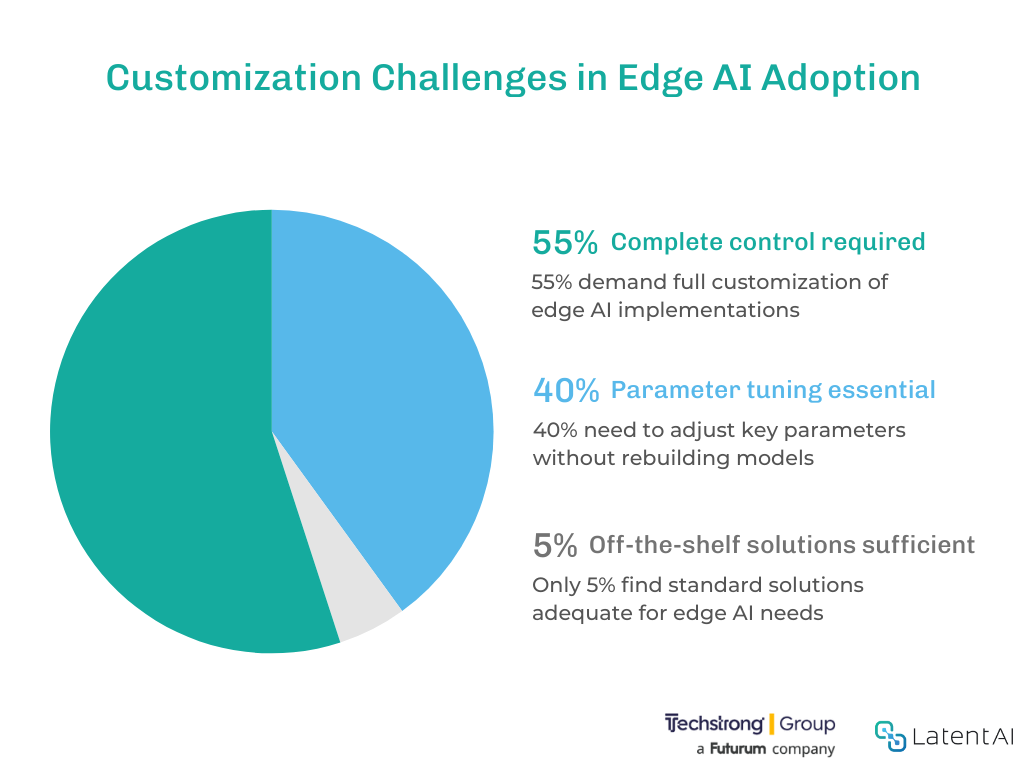

Edge AI’s promise comes with a catch: the organizations hungriest for its benefits often lack the expertise to harness it. The research exposes a stark gap between what organizations need and what current tools deliver:

- A mere 17% of organizations report being “very satisfied” with their edge AI tools

- An overwhelming 95% require customized solutions rather than off-the-shelf offerings

- More than half (52%) express outright dissatisfaction with available tools and platforms

What’s particularly revealing is that the primary barriers aren’t technical limitations but human capital constraints. Over a third of organizations (34%) cite a lack of expertise in developing edge AI systems, with an identical percentage struggling with operational knowledge.

This expertise gap creates a challenging dynamic: organizations recognize the strategic importance of edge AI but find themselves unable to execute effectively. In my discussions with CIOs and technical leaders, I consistently hear the same concern: “We know we need edge capabilities, but we don’t have the specialized talent to implement them at scale.”

Bridging worlds: The emergence of cloud-to-edge development

The most revealing insight from the research may be how organizations are attempting to overcome this expertise gap. Rather than building entirely new development processes for edge AI, they’re seeking to leverage their existing cloud expertise through hybrid approaches. A striking 56% of respondents prefer cloud-based development tools even for edge applications.

This preference signals a critical market need: tools that combine the familiar development experience of cloud environments with automated edge optimization capabilities. As Guy Currier notes in the report, “The most successful platforms will be those that marry cloud-based development environments with automated edge optimization capabilities, effectively hiding the complexity while preserving the control that organizations clearly demand.”

Take a military unit updating drone AI in a combat zone: no cloud, no margin for error. The answer isn’t a steep learning curve; it’s tools that feel like cloud workflows but master edge optimization under the hood. This hybrid model isn’t just practical, it’s the future of scalable AI dominance.

This represents a fundamental shift in how we think about the edge AI development lifecycle. Rather than treating edge deployment as a completely separate domain requiring specialized expertise, we’re seeing the emergence of unified platforms that abstract away complexity while maintaining the control that mission-critical applications demand.

Enabling the next wave of AI innovation

The research highlights a decisive moment in AI’s evolution—a transition from cloud-first to edge-aware development. For organizations building mission-critical AI applications, several imperatives emerge:

- Rethink AI’s price tag. The cost isn’t just hardware; it includes latency’s hidden toll. For real-time needs, edge often outshines cloud in total value, even when its upfront costs are higher.

- Seek platforms that abstract complexity without sacrificing control. The sweet spot in edge AI tools combines automated optimization with the customization capabilities that organizations overwhelmingly demand. Look for solutions that handle the complex technical challenges of edge optimization while providing fine-grained control over critical parameters.

- Build an edge-aware development strategy. Rather than treating edge deployment as an afterthought, successful organizations are integrating edge considerations throughout their AI development lifecycle—from initial model selection to ongoing updates and maintenance.

- Invest in expertise-amplifying technology. With talent scarce, bet on tools that amplify your team rather than replace it, and pick platforms that turn cloud pros into edge practitioners fast.

In this next AI era, victory won’t go to the biggest data centers or the deepest benches; it’ll belong to those who fuse cloud fluency with edge precision. The prize? AI that acts exactly where and when it must. Ready to lead? Start bridging now: download “Leveraging the Edge” and see the data for yourself.

Jags Kandasamy is CEO and Co-founder of Latent AI, a company focused on optimizing AI for edge devices. The complete research findings on edge AI adoption are available in the report “Leveraging the Edge When AI Must Be Real-Time, Reliable, and Low Latency.”