LEIP Deploy

Standardized runtime for your edge devices

Get a secure, standardized runtime engine for frictionless edge deployment.

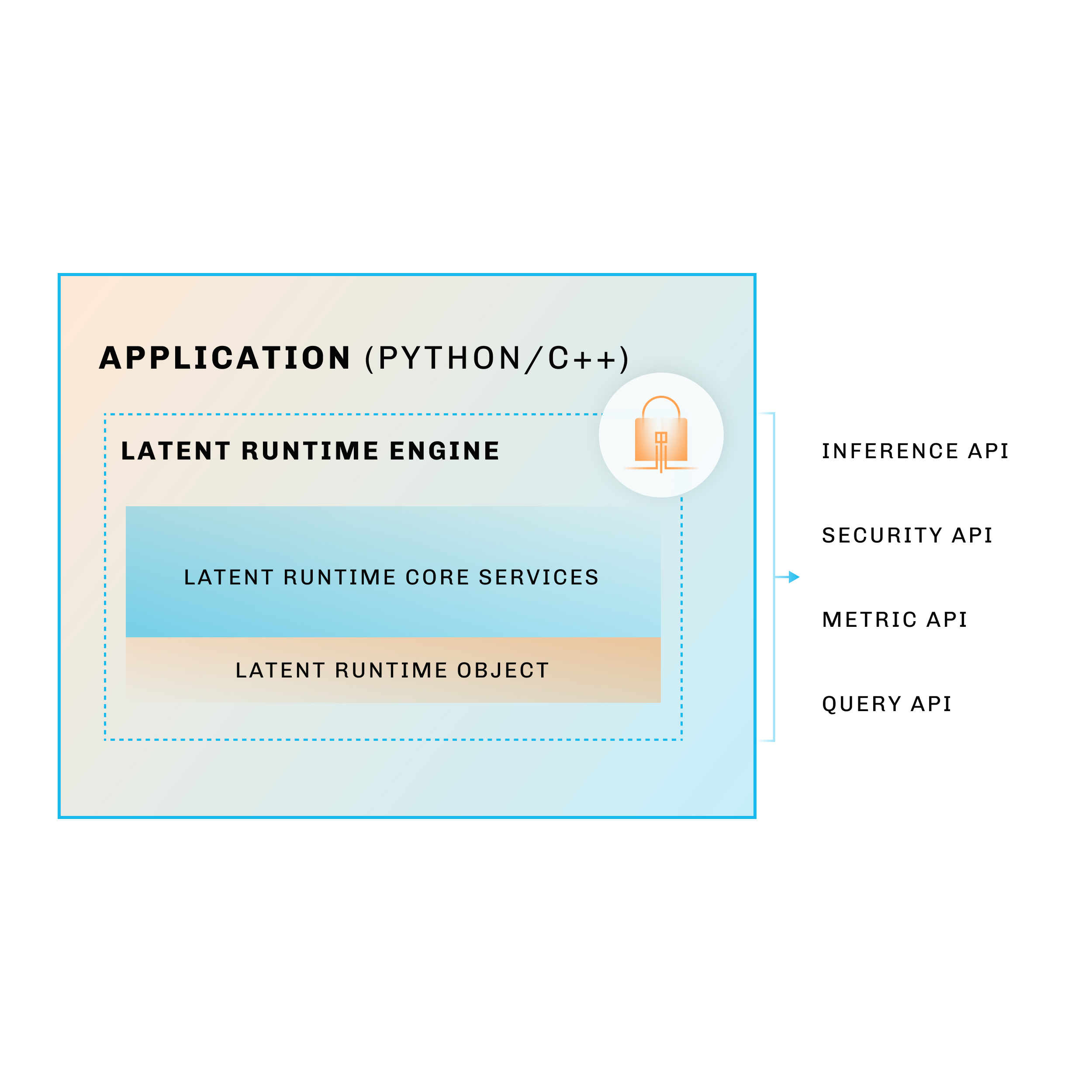

Overview

AI models powered at the edge

The Latent Runtime Engine demystifies edge AI model deployment and management.

Deploy to multiple hardware devices, monitor performance, and update your compiled models, all with a single runtime engine. With a consistent, universal API, you can manage a heterogeneous hardware environment and respond to real-world conditions without changing a single line of application code.

Features

Standardize AI model deployment with a secure, lightweight runtime built for the edge.

Friction-free installation

Install a single runtime for all of your devices.

- Standardized runtime portable to multiple hardware platforms, including NVIDIA Jetson Orin and Xavier, Android Snapdragon, CUDA, ARM, and x86 running Linux.

- Update or replace models with no changes to your runtime engine.

- A unified API for easy integration with third-party applications.

Built-in model security

Keep your models safe and secure with the advanced protection features in our AI development software.

- Deter unauthorized use or distribution of models with digital watermarking, enabling model provenance validation.

- Protect models from theft or compromise with an encrypted runtime.

- Ensure model integrity and prevent tampering with version tracking.

Monitor AI models in production

Ensure optimal model performance with comprehensive monitoring tools and real-time diagnostics from a single development platform.

- Seamlessly measure performance during deployment with real-time diagnostic metrics.

- Update or replace models with no changes to your application.

- Easily move models from platform to platform with cross-hardware compatibility.

Simplify AI deployment with LEIP Deploy

In this video, Latent AI embedded engineers Natalia Jurado and Puru Saravanan demonstrate how to easily deploy AI models to a wide range of edge devices using LEIP Deploy. Learn how to streamline the deployment process, accelerate inference times, and ensure reliable performance with LEIP Deploy.

Ready to get started?

Schedule a meeting with an AI expert today.

Resources

Learn more about security and management features of the Latent Runtime Engine.

LEIP: Faster Inference and Better Performance. Accelerated inference is the key to real-time vehicle detection, lower latency, and enhanced accuracy.

Building resilient edge AI with composable, secure, and adaptable models

Securing your AI models: Watermarking with LEIP Optimize

FAQs

Learn how the standardized runtime engine offers frictionless edge deployment.

How do I deploy AI models using the Latent Runtime Engine?

To deploy a model using the Latent Runtime Engine (LRE), simply install the LRE on your edge device and load your compiled model. You can easily swap models with a single step and make consistent API calls directly to the LRE, regardless of the model or device type.
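The swap-without-code-changes idea can be sketched in a few lines of Python. This is only an illustration of the pattern, not the Latent Runtime Engine's actual API: `SketchRuntime`, `load`, `infer`, and `application_step` are hypothetical names, and plain Python functions stand in for compiled models.

```python
from typing import Callable, List, Optional

Model = Callable[[List[float]], List[float]]


class SketchRuntime:
    """Hypothetical stand-in for a runtime engine (NOT the real LRE API)."""

    def __init__(self) -> None:
        self._model: Optional[Model] = None
        self.model_name = ""

    def load(self, name: str, model: Model) -> None:
        # Loading a new model replaces the previous one in a single step.
        self._model = model
        self.model_name = name

    def infer(self, inputs: List[float]) -> List[float]:
        if self._model is None:
            raise RuntimeError("no model loaded")
        return self._model(inputs)


def application_step(runtime: SketchRuntime, frame: List[float]) -> List[float]:
    # Application code only ever calls runtime.infer(); it never
    # needs to know which model is currently loaded.
    return runtime.infer(frame)


runtime = SketchRuntime()

# Placeholder "models": simple functions standing in for compiled artifacts.
runtime.load("model_a", lambda xs: [x * 2.0 for x in xs])
first = application_step(runtime, [1.0, 2.0])

# Swap the model; application_step is unchanged.
runtime.load("model_b", lambda xs: [x + 1.0 for x in xs])
second = application_step(runtime, [1.0, 2.0])

print(runtime.model_name, first, second)
```

The point of the sketch is that `application_step` is written once against the runtime's interface, so replacing the model does not touch application code.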

How do I choose the right AI runtime engine for my project?

The right AI runtime engine depends on factors like hardware and model compatibility, performance needs, and optimization support. The Latent Runtime Engine (LRE) is ideal for moving from prototyping to production because it offers a single runtime for diverse models, hardware, and optimizations.

How do I integrate the Latent Runtime Engine with other software systems?

The Latent Runtime Engine has a unified API that is easy to integrate with other third-party applications.

What kinds of performance metrics are available with LEIP Deploy?

LEIP Deploy offers a range of tools to help maximize performance of your compiled model, including model metadata, inference execution time, and runtime options like TensorRT support.
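As a rough illustration of what an inference execution time metric measures, the sketch below times repeated calls to an inference function using only the Python standard library. It does not use LEIP Deploy's own tooling: `time_inference` is a hypothetical helper, and the lambda is a placeholder for a real model call.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_inference(
    infer: Callable[[List[float]], List[float]],
    inputs: List[float],
    runs: int = 20,
) -> Dict[str, float]:
    """Time `runs` calls to `infer` and summarize latency in milliseconds."""
    durations_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(inputs)
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "runs": runs,
        "mean_ms": statistics.mean(durations_ms),
        "max_ms": max(durations_ms),
    }


# Placeholder inference function standing in for a compiled model.
stats = time_inference(lambda xs: [x * 2.0 for x in xs], [1.0] * 100, runs=5)
print(stats)
```

On a real device, the first few runs are often slower than steady state, so it can help to discard warm-up iterations before summarizing.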

How does LEIP Deploy handle deployment to multiple hardware targets?

LEIP Deploy simplifies heterogeneous deployment by providing a single runtime engine that supports a variety of models, optimization techniques, and hardware targets.

Book a Custom Demo

LEIP tools simplify the process of designing edge AI models. They provide recipes to kickstart your design and automate compilation for your target hardware, eliminating the need for manual optimization.

Schedule a personalized demo to discover how LEIP can enhance your edge AI project.