LEIP OPTIMIZE

Edge AI model optimization, simplified

Smart tools that handle the complex task of hardware and software optimization for your ML models.

Overview

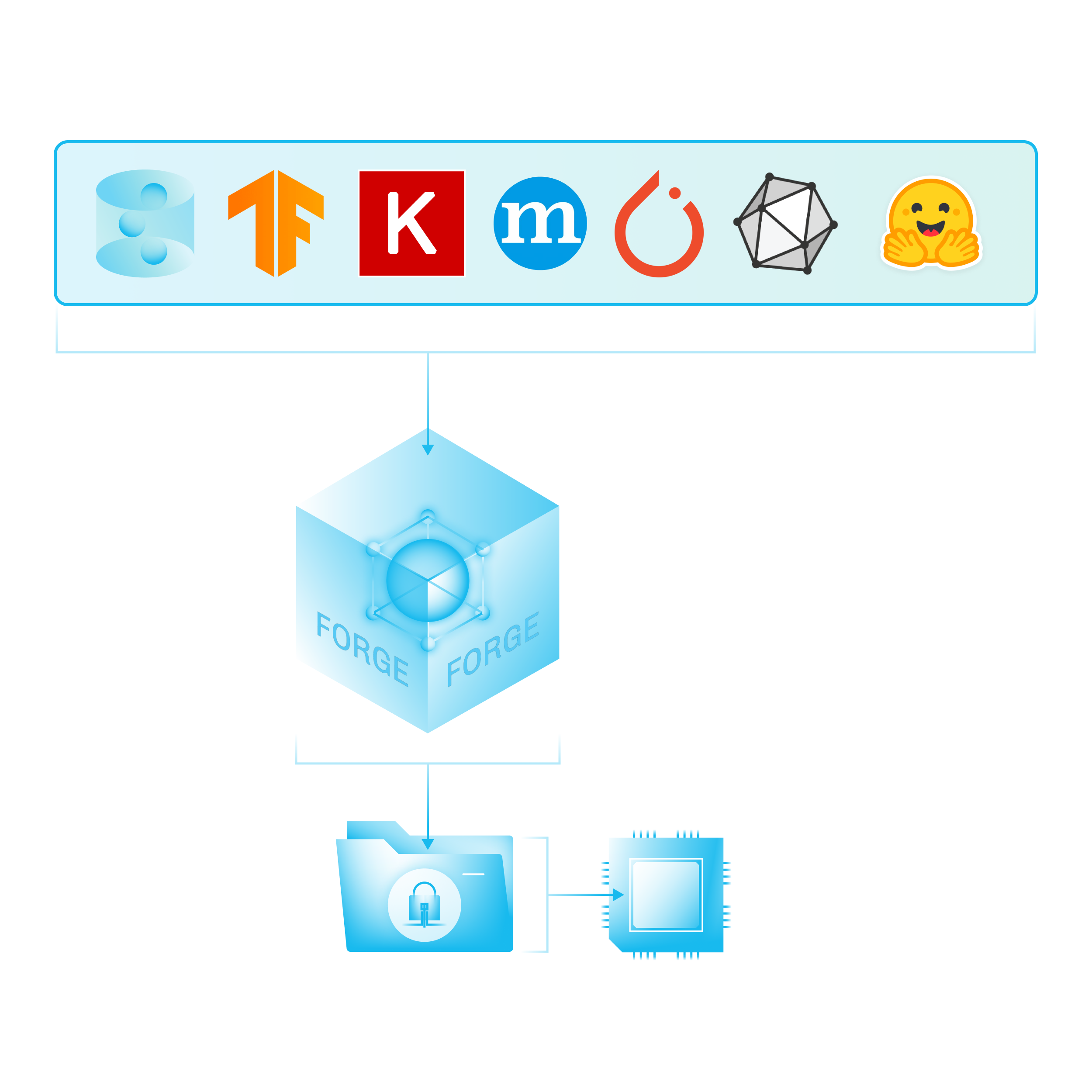

Introducing Forge

Compile and deploy edge AI to more devices than ever before with Forge.

Forge is the central technology within LEIP Optimize and your go-to tool for simplifying the process of getting your AI models ready for edge devices. It automates the difficult and time-consuming tasks of quantizing and compiling your models, saving you time and effort.

Benefits

Optimize for any device, faster.

Features

Don’t fret over model-hardware configuration; optimize it.

Automate optimization with Forge

Forge makes AI deployment to the edge straightforward and powerful.

- Target hardware without in-depth hardware knowledge or expertise.

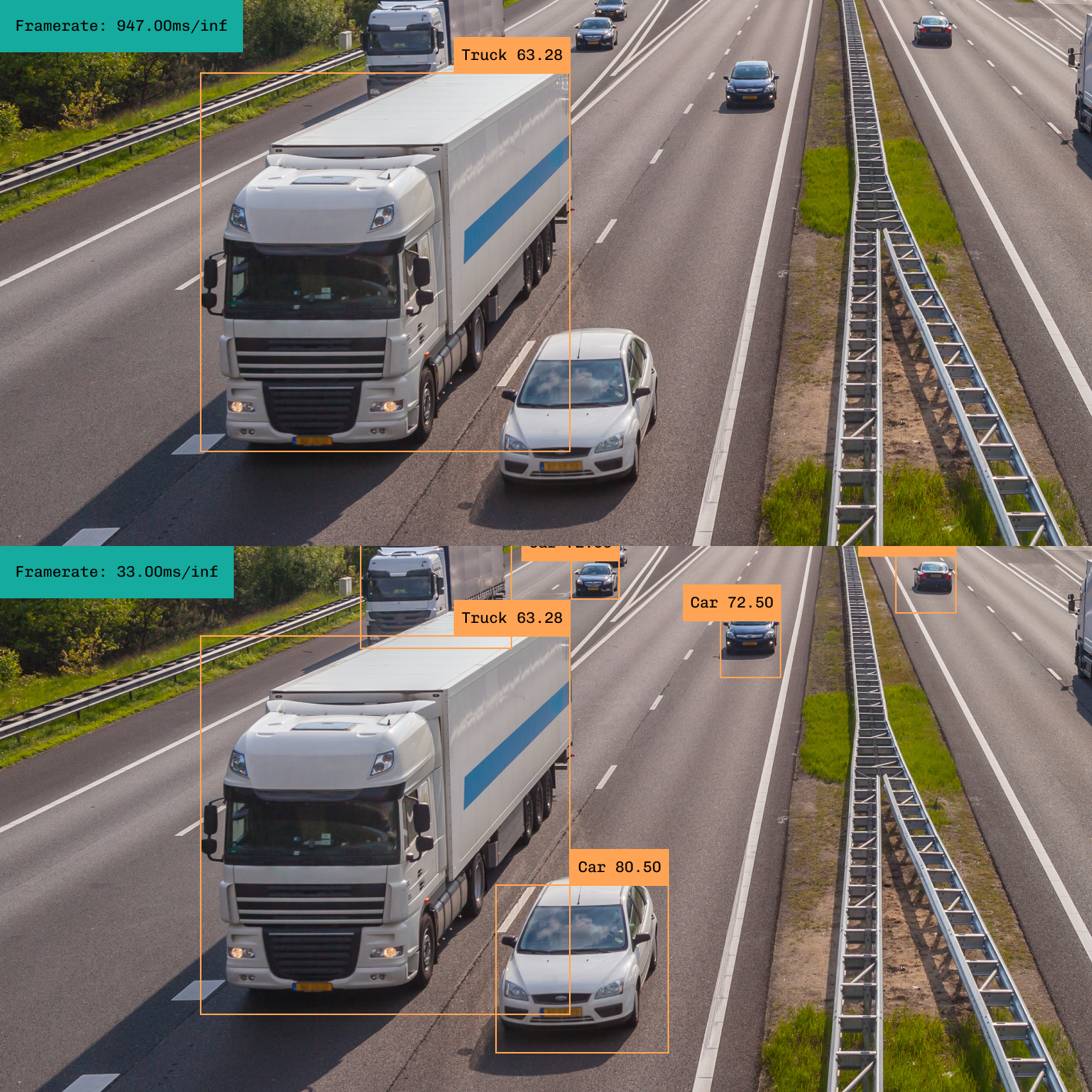

- Improve edge AI inference speed by optimizing the model and leveraging acceleration on your target hardware.

- Convert a machine learning model to a single portable file.

- Build your own tools on top of Forge.

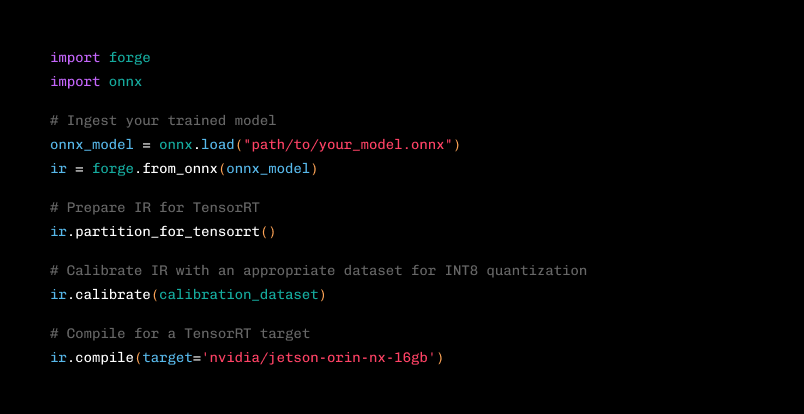

Agnostic model support

Easily integrate your AI model into LEIP Optimize.

- Supports computer vision models and most models with dynamic tensors, such as transformers.

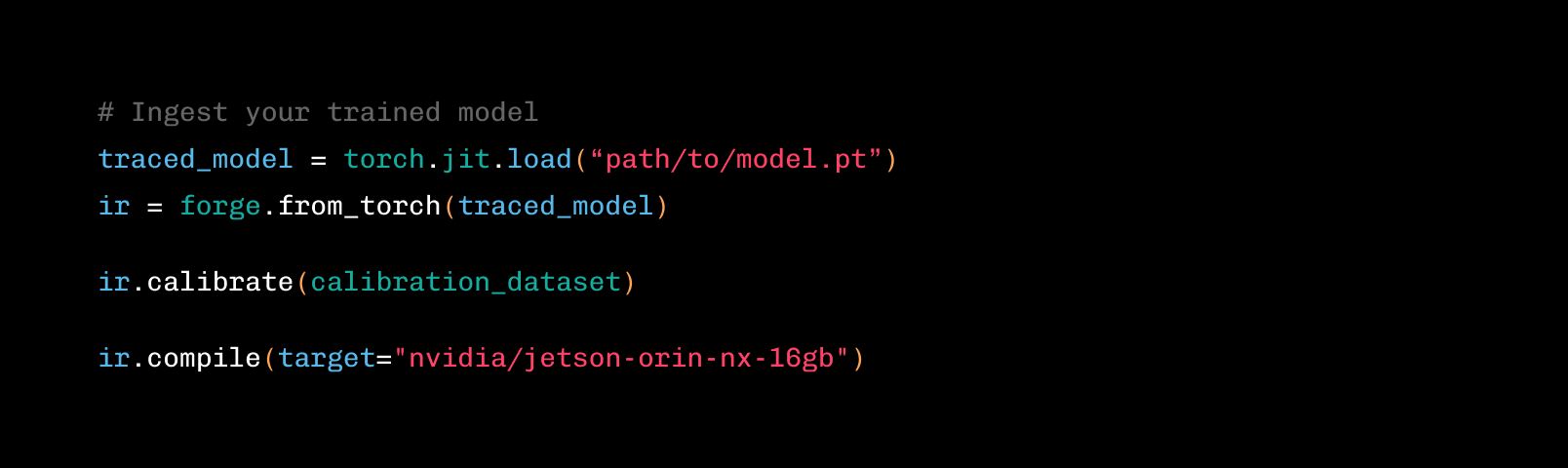

- Ingest models from popular frameworks like TensorFlow, PyTorch, and ONNX, including the majority of computer vision models on Hugging Face.

- Integrate with your current ML environment, so you keep the tooling you already know.

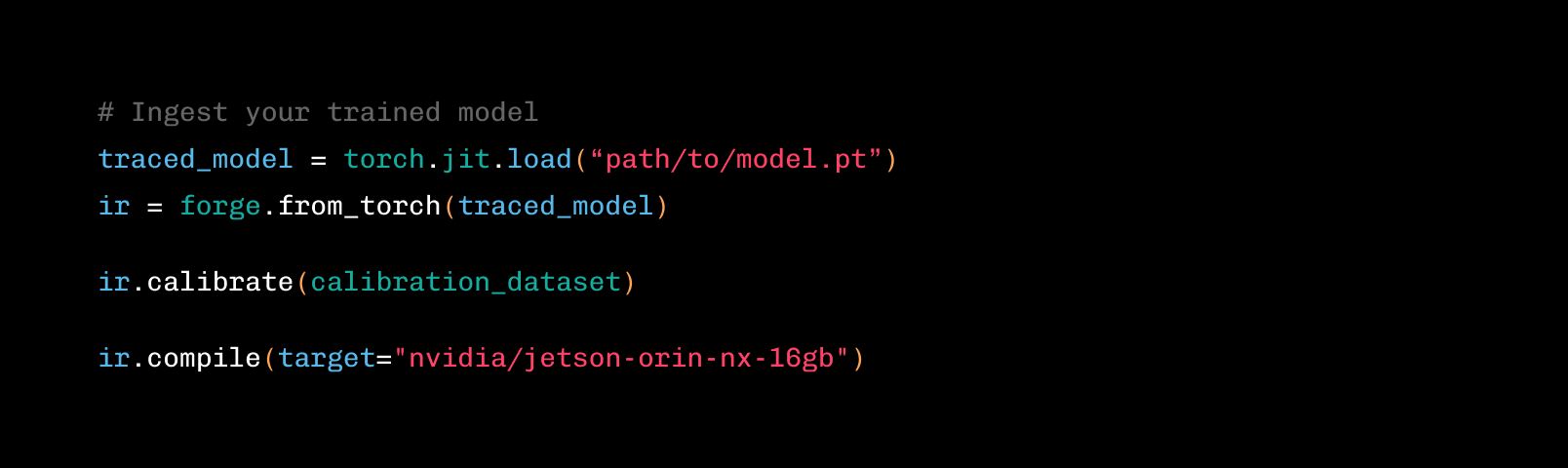

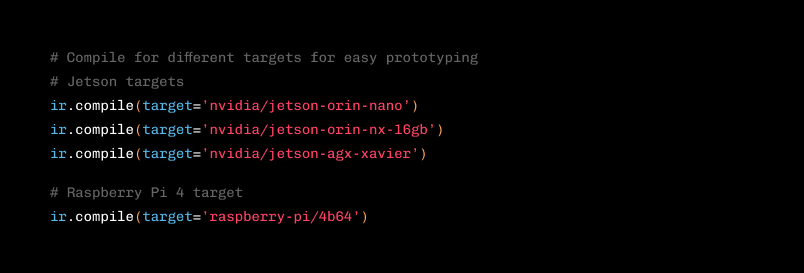

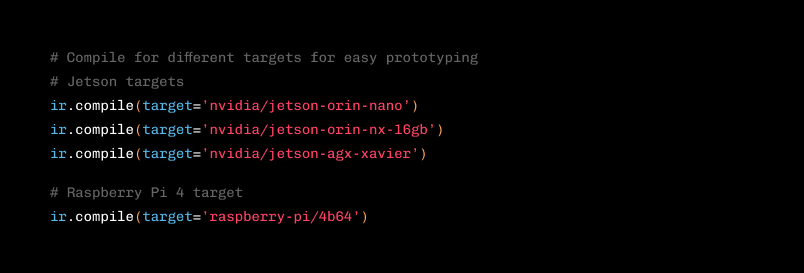

Accelerate prototyping

Streamlined model-hardware optimization that enables newcomers and experts to prototype rapidly and accelerate deployment.

- Optimize AI models using hardware accelerators for different target hardware.

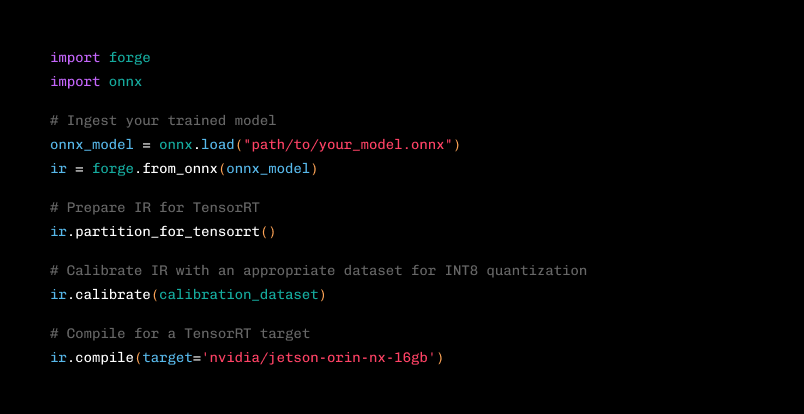

- Speed up model predictions by quantizing models to run at lower bit precision (INT8) while preserving accuracy.

- Script optimization and compilation jobs for reusability and automation.
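To make the INT8 point above concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is an illustration of the general technique only, not Forge's API or its actual quantization scheme; the function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127].

    Hypothetical helper for illustration; not part of LEIP Optimize or Forge.
    """
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale would work.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the INT8 range used here.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


# Made-up weights, chosen only to show the round trip.
weights = [0.82, -1.27, 0.05, 0.0, 0.64]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# The round-trip error per value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each value is stored in 8 bits instead of 32, and the round-trip error stays within half a quantization step. Production toolchains typically go further, for example by calibrating scales on representative data, which this sketch does not attempt.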

Expert precision control

Tools that allow experts to fine-tune and secure AI models for peak performance.

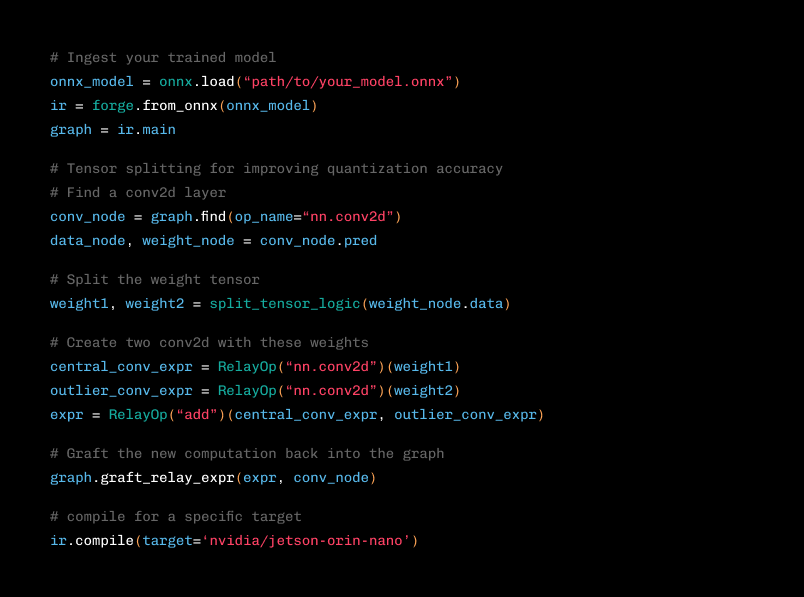

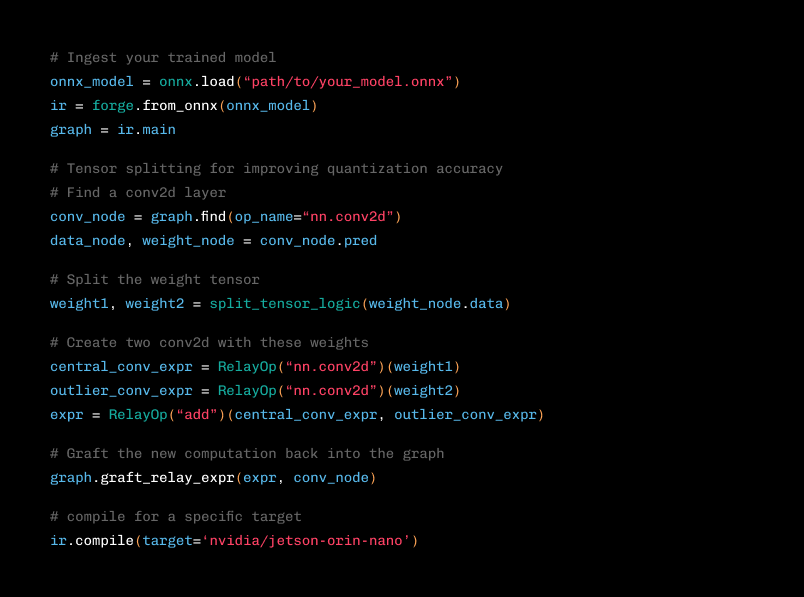

- Manipulate your models with our direct graph manipulation tool to debug and modify incompatible models for specific hardware.

- Explore the design space for further optimization of AI inference at the edge.

- Inject watermarks to protect your models.

Compressing AI models for edge deployment with LEIP Optimize

In this video, Adnaan Yunus, a machine learning engineer at Latent AI, talks about the challenges of deploying AI models on resource-constrained edge devices. He’ll show you how LEIP Optimize can help you overcome these challenges by compressing models, accelerating inference, and optimizing for power efficiency. Watch the demo to see LEIP Optimize in action.

Ready to get started?

Schedule a meeting with an AI expert today.

Resources

Discover how LEIP Optimize can help you quickly configure and deploy models to your target hardware.

FAQ

Learn how LEIP Optimize helps you prep models for edge devices.

Can Latent AI help optimize models for speed and efficiency on edge devices?

Yes. Latent AI optimizes models to execute faster, use fewer resources, and run more securely by simplifying model-hardware optimization for you with tools in the LEIP Optimize framework.

How can we reduce model size and complexity to fit on edge devices?

LEIP Optimize both quantizes and compresses your model in preparation for deployment. In addition, LEIP Optimize compiles your model using the hardware-specific optimization libraries provided for your target hardware.
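As a rough back-of-the-envelope illustration of why quantization shrinks a model, the sketch below estimates weight storage at different bit widths. The parameter count is a made-up example and the helper `model_size_mb` is hypothetical; real file sizes also depend on metadata, layers kept at higher precision, and any further compression.

```python
def model_size_mb(num_parameters: int, bits_per_parameter: int) -> float:
    """Approximate weight storage in megabytes (10**6 bytes), ignoring metadata."""
    return num_parameters * bits_per_parameter / 8 / 1_000_000


# Hypothetical model with 25 million parameters.
params = 25_000_000
fp32_mb = model_size_mb(params, 32)  # 32-bit floating-point weights
int8_mb = model_size_mb(params, 8)   # 8-bit integer weights
reduction = fp32_mb / int8_mb

print(f"FP32: {fp32_mb} MB, INT8: {int8_mb} MB, reduction: {reduction}x")
```

Under these assumptions, moving from 32-bit to 8-bit weights cuts weight storage by a factor of four.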

What types of AI models does Latent AI support?

You can import models into LEIP Optimize from ONNX, PyTorch, TensorFlow, TensorFlow Lite, and Keras.

What hardware does Latent AI support?

Latent AI supports a variety of edge platforms including NVIDIA Jetson Orin and Xavier, Android Snapdragon, CUDA, ARM, and x86 running Linux.

How can Latent AI ensure data privacy and security when training models on edge devices?

The LEIP platform helps you deter unauthorized use or distribution of models through digital watermarking, protect models from theft or compromise through an encrypted runtime, and ensure model integrity and prevent tampering through version tracking.

Book a Custom Demo

LEIP tools simplify the process of designing edge AI models. They provide recipes to kickstart your design, automate compilation, and target hardware, eliminating the need for manual optimization.

Schedule a personalized demo to discover how LEIP can enhance your edge AI project.