LEIP Design

Predictable, effortless AI every time

Easy-to-use AI development software that helps you pick the right AI model and hardware, then fine-tune the model for your needs.

Overview

What is a recipe?

Recipes are pre-tested configurations that take the guesswork out of choosing the right model-hardware combination for your project.

If you need to quickly prototype and fine-tune AI models for edge applications, recipes offer a fast way to get your models up and running. They help you identify the best model and hardware for your application.

Benefits

Launch intuitive, reliable ML solutions at the edge.

Features

Build production-ready models in no time.

Simple, quick model design

Say goodbye to guesswork! Latent AI’s library of over 1,000 pre-tested model-hardware combinations makes it easy to find the perfect fit for your project.

- Choose from recipes benchmarked for on-device performance, inference speed, and memory footprint.

- Hook your data into any recipe with ease.

- Fine-tune your recipes by swapping, updating, and adding ingredients as needed.

Rapid prototyping for any device

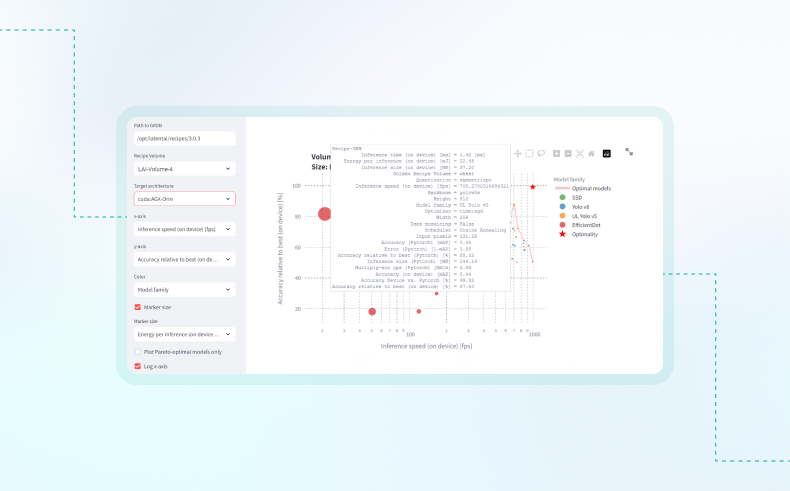

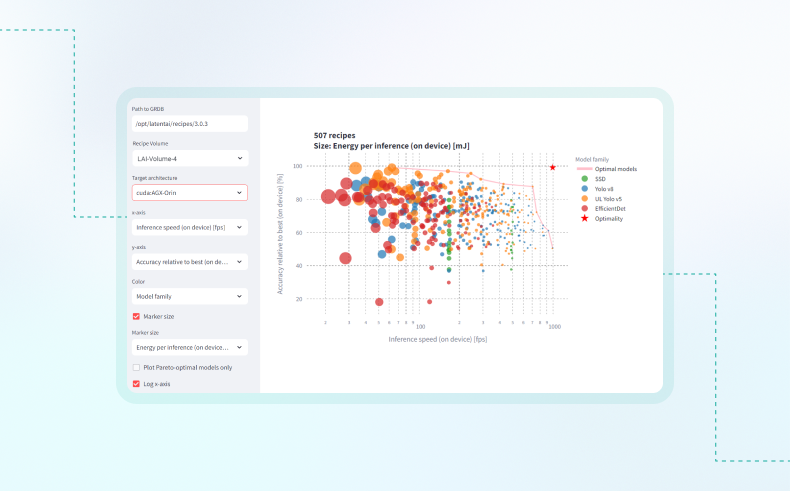

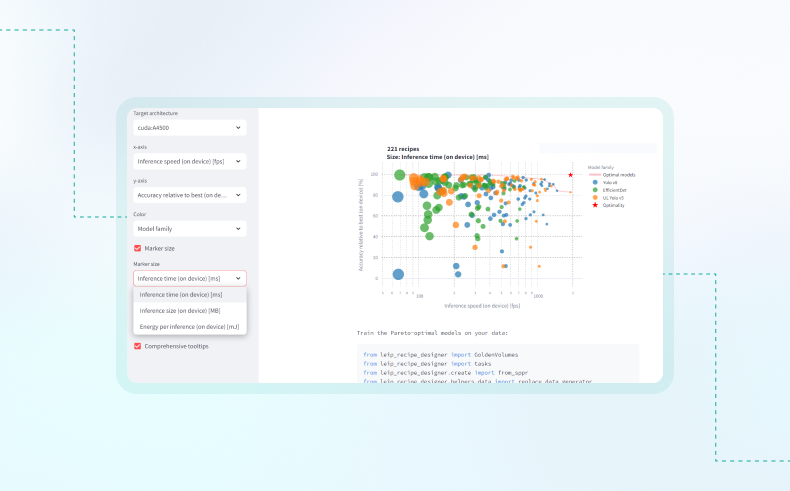

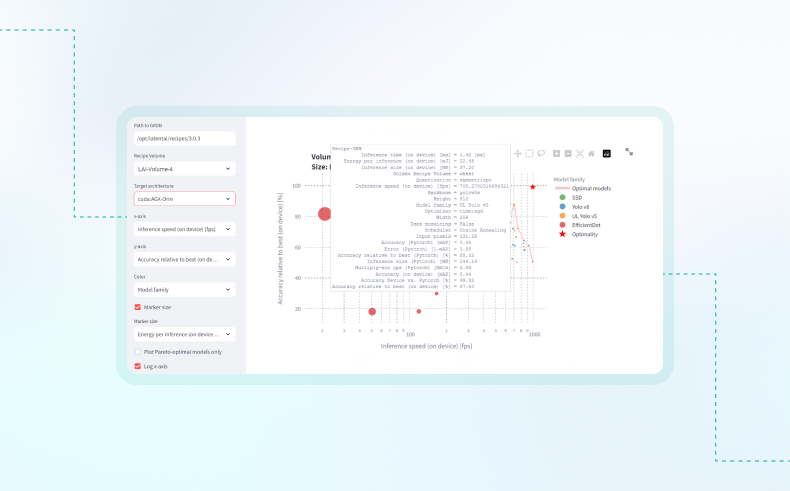

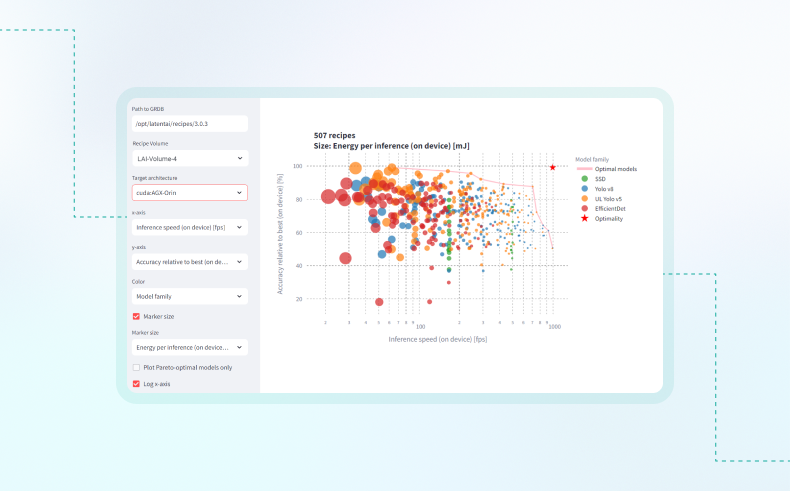

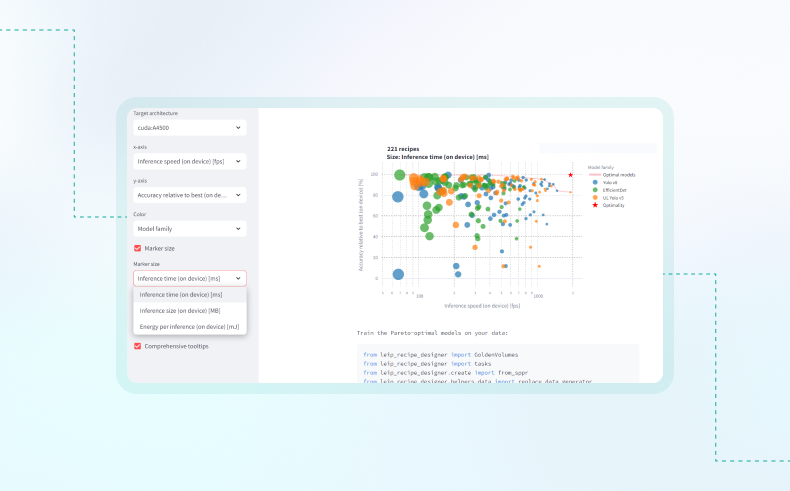

LEIP Design’s powerful recipe designer provides you with all the metrics to make prototyping effortless.

- Analyze size, accuracy, and power trade-offs interactively to meet your exact criteria.

- Target multiple hardware platforms without restarting the design process.

- Explore alternatives and validate feasibility before you train.
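The trade-off analysis described above can be pictured as a small filtering exercise. The sketch below is not LEIP Design's API: the `Candidate` fields, the `shortlist` helper, and every number in it are hypothetical and only illustrate the idea of keeping the candidates that fit a size and power budget and are not beaten on every metric by another candidate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical model-hardware candidate; not a LEIP Design type."""
    name: str
    accuracy: float  # higher is better
    size_mb: float   # lower is better
    power_w: float   # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on every metric and strictly better on one."""
    no_worse = (a.accuracy >= b.accuracy
                and a.size_mb <= b.size_mb
                and a.power_w <= b.power_w)
    better = (a.accuracy > b.accuracy
              or a.size_mb < b.size_mb
              or a.power_w < b.power_w)
    return no_worse and better


def shortlist(cands, max_size_mb, max_power_w):
    """Keep candidates within budget, drop dominated ones, sort by accuracy."""
    feasible = [c for c in cands
                if c.size_mb <= max_size_mb and c.power_w <= max_power_w]
    front = [c for c in feasible
             if not any(dominates(o, c) for o in feasible)]
    return sorted(front, key=lambda c: c.accuracy, reverse=True)


# Made-up numbers, for illustration only.
candidates = [
    Candidate("candidate-a", accuracy=0.80, size_mb=10, power_w=2.0),
    Candidate("candidate-b", accuracy=0.75, size_mb=12, power_w=2.5),
    Candidate("candidate-c", accuracy=0.85, size_mb=40, power_w=5.0),
    Candidate("candidate-d", accuracy=0.70, size_mb=4, power_w=1.0),
]

picked = shortlist(candidates, max_size_mb=20, max_power_w=3.0)
```

Here `candidate-c` exceeds the budget and `candidate-b` is worse than `candidate-a` on all three metrics, so only `candidate-a` and `candidate-d` remain as genuine trade-offs.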

Retrain and reuse in minutes, not hours

Keep your AI models up to date with AI training software that speeds retraining and redeployment.

- Quickly update and retrain your model with fresh data by reusing your existing pipeline.

- Effortlessly swap models, adjust hyperparameters, or change hardware targets with a modular workflow.

- Push model updates to edge devices with no change to dependencies, application, or hardware.

Streamline your AI workflow with LEIP Design

In this video, Sai Mitheran, a machine learning engineer at Latent AI, demonstrates how LEIP Design simplifies the complex process of building and optimizing AI models. Learn how LEIP Design’s modular recipes, rapid prototyping capabilities, and hardware optimization features can accelerate your AI development.

Resources

Discover how LEIP Design provides predictable, effortless design every time.

FAQs

Learn how LEIP Design helps you build production-ready models.

How does Latent AI help to design AI models?

Latent AI provides AI creation software in LEIP Design. LEIP Design jumpstarts your model development process by helping you to select a pretrained model that is best suited to your application and the hardware target you intend to use in your business.

How does Latent AI support AI training?

LEIP Design is AI training software that lets you select a model and hardware target, then train the model on the dataset you provide.

What kinds of AI models does Latent AI support?

Latent AI primarily supports computer vision AI models and most models with dynamic tensors, such as transformers. Our growing recipe library currently consists of detectors and classifiers.

What format does my data need to be in?

Latent AI works best with structured data in COCO, PASCAL VOC, or YOLO format.
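If your annotations are in one of these formats and you need another, the bounding-box conventions differ as follows: COCO stores `[x_min, y_min, width, height]` in pixels, PASCAL VOC stores `(xmin, ymin, xmax, ymax)` in pixels, and YOLO stores `(x_center, y_center, width, height)` normalized by image size. The sketch below converts a single box; the function names are illustrative and are not part of any Latent AI tool.

```python
def coco_to_yolo(bbox, img_w, img_h):
    """COCO [x_min, y_min, width, height] in pixels ->
    YOLO (x_center, y_center, width, height) normalized to [0, 1]."""
    x_min, y_min, w, h = bbox
    return (
        (x_min + w / 2) / img_w,
        (y_min + h / 2) / img_h,
        w / img_w,
        h / img_h,
    )


def voc_to_yolo(box, img_w, img_h):
    """PASCAL VOC (xmin, ymin, xmax, ymax) in pixels -> YOLO normalized box."""
    xmin, ymin, xmax, ymax = box
    # Express the VOC corners as a COCO-style box, then reuse the conversion.
    return coco_to_yolo([xmin, ymin, xmax - xmin, ymax - ymin], img_w, img_h)


# The same 200x100 box in a 400x200 image, written in both source formats.
from_coco = coco_to_yolo([100, 50, 200, 100], img_w=400, img_h=200)
from_voc = voc_to_yolo((100, 50, 300, 150), img_w=400, img_h=200)
```

Both calls describe the same box, so they produce the same YOLO tuple. A full conversion would also need to map class labels and write one text file per image, which this sketch leaves out.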

Can Latent AI help me update my ML model after I’ve deployed it?

Yes. LEIP Design provides a trusted, reusable MLOps pipeline so that you can quickly update and redeploy models when needed.

Book a Custom Demo

LEIP tools simplify the process of designing edge AI models. They provide recipes to kickstart your design, automate compilation, and target hardware, eliminating the need for manual optimization.

Schedule a personalized demo to discover how LEIP can enhance your edge AI project.

Ready to get started?

Schedule a meeting with an AI expert today.