Find Your Best Model Using LEIP Recipes

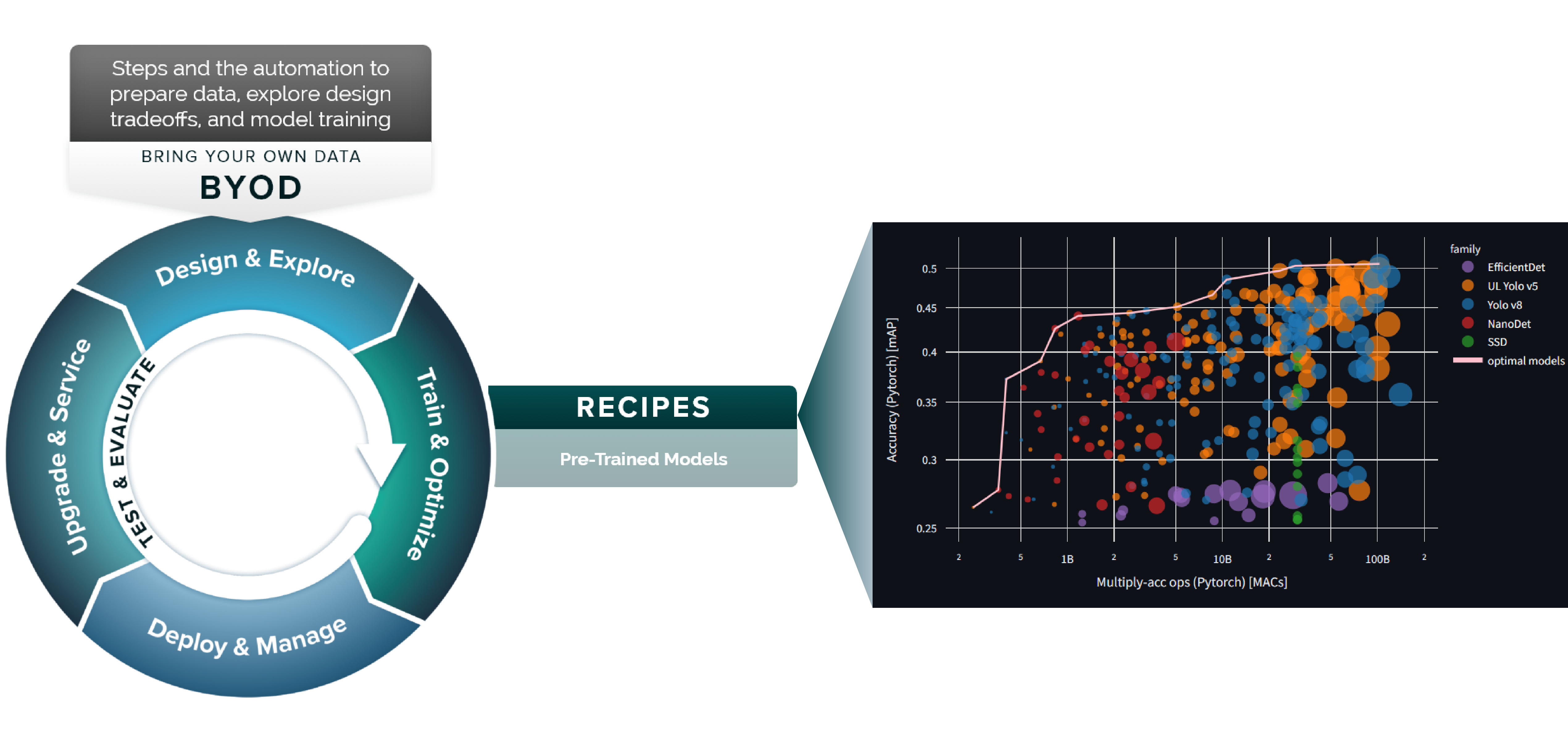

Researching which hardware best suits your AI and data can be a time-consuming and frustrating process that requires machine learning (ML) expertise to get right. LEIP accelerates time to deployment with Recipes, a rapidly growing library of over 50,000 pre-qualified ML model configurations that let you quickly compare performance across hardware targets (CPUs, GPUs, FPGAs, etc.) to find the best combination of model and hardware for your data.

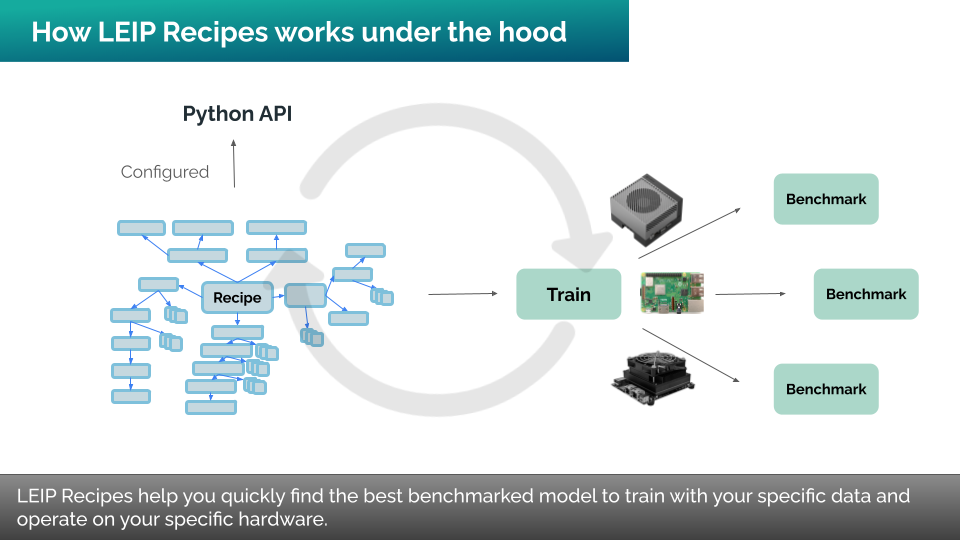

A Recipe is a point in the search space of ML models, data formats, optimization schemes, and deployment targets, and it defines everything about the end-to-end modeling and deployment process. Recipes speed development by letting the user select a model for their data and then supplying pre-configured steps and settings that deliver efficient performance of that model on the desired target platform. A recipe defines the entire ML workflow through components including models, datasets, dataset ingestors for popular data formats (so users can ingest their own data), data augmentation techniques, training “tricks,” optimizers, learning rate schedulers, visualization options, and more. Each recipe can contain multiple components.

Users load Recipe components via a Python API. Providing an API that abstracts the intricacies of model selection and configuration ensures consistency across diverse model families and deployment scenarios, and automates the search for optimal ML component combinations.
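To make the idea of a recipe as a bundle of components concrete, here is a minimal Python sketch. It is not the LEIP API: the `Recipe` class, its field names, the `with_target` helper, and all component names are hypothetical and chosen only for illustration. It shows one way a recipe could be modeled as an immutable record, so that changing the deployment target leaves every other component untouched.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Recipe:
    """Hypothetical recipe record (illustration only, not the LEIP API)."""
    model: str
    data_format: str
    optimizer: str
    lr_scheduler: str
    target: str
    augmentations: tuple = ()

    def with_target(self, target: str) -> "Recipe":
        # Return a copy that differs only in its deployment target.
        return replace(self, target=target)


# All component names below are made-up placeholders.
base = Recipe(
    model="detector-small",
    data_format="coco",
    optimizer="sgd",
    lr_scheduler="cosine",
    target="cpu",
    augmentations=("flip", "crop"),
)

# Same model, data format, and training settings, different hardware target.
gpu_variant = base.with_target("gpu")
```

Keeping the record immutable means each variant is a distinct, comparable configuration, which is convenient when many combinations are being benchmarked side by side.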

LEIP Recipes

Latent AI has developed a set of benchmarked Recipes that are pre-qualified against specific frameworks and performance expectations. LEIP Recipes can dramatically speed up how you design and deploy computer vision models. Latent AI’s engineers have automated the tedious process of testing recipe combinations to identify those that yield the most accurate models, saving users the effort of repeated trial and error.

Our process is comprehensive: we take open-source models, fine-tune them, and rigorously test them across an array of hardware options. This diligence allows us to assess each recipe’s training duration, inference speed (the time it takes for a model to make a prediction), memory usage, and power consumption. These metrics give users the information they need to choose the most suitable solution without engaging in time-consuming research.

We focus on four attributes with these runs:

- How long does it take to train a particular model for a particular hardware target?

- What is the inference speed of that model on that hardware?

- How much memory does it take?

- How much power does it draw?

Answering these four questions lets users pick the best solution for their needs without having to become an ML or hardware expert. Some LEIP Recipes prioritize speed, offering rapid inference times at the cost of some accuracy, a trade-off suitable for time-critical applications. Others prioritize precision, delivering highly accurate results at the expense of longer inference times, which is ideal for scenarios where accuracy is non-negotiable. The key is selecting the recipe that meets your performance and accuracy requirements.
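The selection step described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Latent AI's tooling: the `BenchmarkResult` record, the `pick_recipe` function, the recipe names, and every number are invented placeholders, not measured results. The sketch filters benchmark records by limits on inference time, memory, and power for one hardware target, then returns the most accurate recipe that fits.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class BenchmarkResult:
    """Hypothetical benchmark record covering the four metrics plus accuracy."""
    recipe: str
    target: str
    train_hours: float
    inference_ms: float
    memory_mb: float
    power_w: float
    accuracy: float


def pick_recipe(
    results: Iterable[BenchmarkResult],
    target: str,
    max_inference_ms: float,
    max_memory_mb: float,
    max_power_w: float,
) -> Optional[BenchmarkResult]:
    """Return the most accurate result within the limits, or None if none fits."""
    feasible = [
        r for r in results
        if r.target == target
        and r.inference_ms <= max_inference_ms
        and r.memory_mb <= max_memory_mb
        and r.power_w <= max_power_w
    ]
    return max(feasible, key=lambda r: r.accuracy) if feasible else None


# Placeholder values for illustration only; these are not real measurements.
results = [
    BenchmarkResult("fast-recipe", "gpu", 2.0, 8.0, 300.0, 10.0, 0.80),
    BenchmarkResult("accurate-recipe", "gpu", 6.0, 40.0, 900.0, 15.0, 0.92),
]

# A tight latency budget excludes the slower, more accurate recipe.
time_critical = pick_recipe(results, "gpu", 10.0, 1000.0, 20.0)

# A relaxed latency budget lets the more accurate recipe win.
accuracy_first = pick_recipe(results, "gpu", 100.0, 1000.0, 20.0)
```

With these placeholder records, the tight budget selects `fast-recipe` and the relaxed budget selects `accurate-recipe`, mirroring the speed versus accuracy trade-off described above.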

What LEIP Recipes Can Do For You

Users can browse the list of LEIP Recipes, which are categorized by task, to find the ones relevant to their needs.

The major benefit of LEIP Recipes is that they are already configured for a specific task. Users can easily experiment with multiple LEIP Recipes to evaluate which one works best for their application. Recipes let you quickly find the optimal model to train on your data and switch hardware without retraining.