LATENT AI EFFICIENT INFERENCE PLATFORM (LEIP)

Intuitive for beginners, powerful for experts.

Most MLOps platforms are complex. LEIP simplifies the process, empowering developers of all skill levels to build secure models ultra-fast.

MODEL PERFORMANCE

How we compare.

11.7x

Faster inference with optimization on NVIDIA Jetson AGX Orin.

10x

Energy reduction per inference on NVIDIA Jetson AGX Orin.

3x

Reduction in GPU memory usage on NVIDIA Jetson AGX Orin.

MODULES

We make it easy to model with certainty and deploy with confidence.

LEIP Design

Easy-to-use model development software that helps you choose the right combination of AI model and hardware, then fine-tune it for your needs.

- Pick from a library of pre-tested model-hardware combinations to find the perfect fit for your project.

- Analyze size, accuracy, and power trade-offs interactively to meet your exact criteria.

- Keep your AI models up to date with quick retraining and redeployment.

LEIP Optimize

Smart tools that handle the complex task of optimizing your machine learning models for both hardware and software, so you don’t have to.

- Integrate your AI model into LEIP Optimize with ease.

- Rapidly prototype and accelerate deployment with preconfigured model-hardware optimization.

- Fine-tune and secure your models for peak performance.

LEIP Deploy

A single runtime to simplify model deployment, monitoring, and updates.

- Easily install a standardized runtime that is portable to multiple hardware platforms.

- Keep your models safe and secure with protection features such as watermarking and encryption.

- Monitor model performance with real-time insights into the model settings and execution efficiency you need to manage your models.

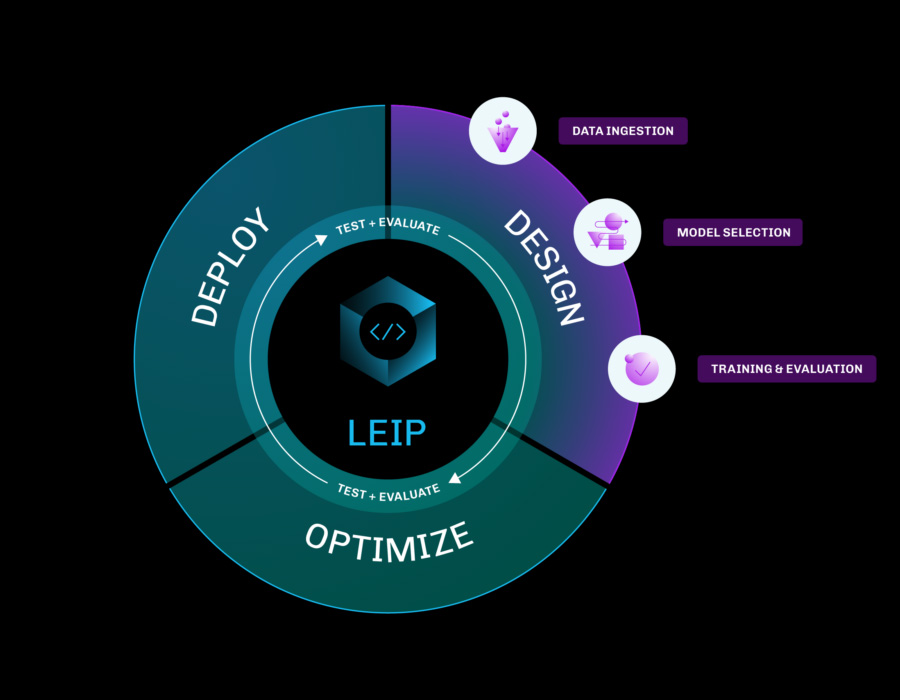

MACHINE LEARNING WORKFLOW

From lab to life, seamlessly.

With LEIP, you can bring your own data or model and quickly build powerful AI solutions.

Data Ingestion: LEIP allows you to ingest data specific to your business problem.

Model Selection: Choose the best model for your needs from 1,000 pre-tested model-hardware configurations.

Training & Evaluation: Quickly fine-tune pre-trained models on your dataset and validate the results.

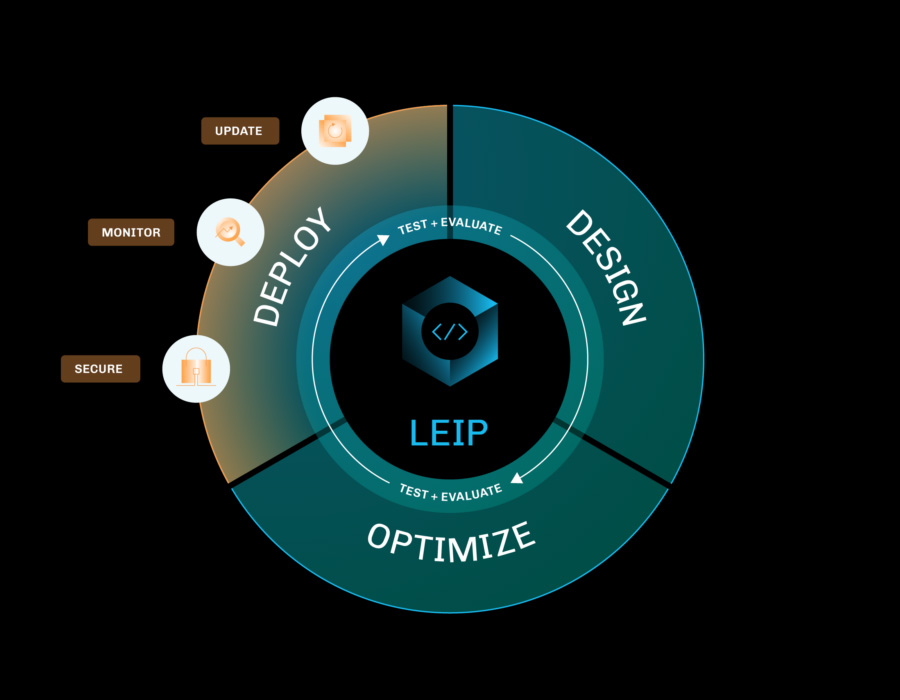

Model Compression: LEIP Optimize both quantizes and compiles your model for optimal performance on edge devices.

Hardware Compilation: Forge makes compilation simpler and faster by applying optimization libraries specific to your target hardware.

Secure: Apply watermarking, encryption, and version tracking to your model.

Monitor: Ensure optimal model performance with comprehensive model management tools and real-time diagnostics in the Latent Runtime Engine.

Update: Maintain model accuracy by fine-tuning on updated data and redeploying.
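The Model Compression step says LEIP Optimize quantizes your model, but this page does not describe how. As a generic illustration only, the sketch below shows standard 8-bit affine (asymmetric) post-training quantization of a list of weights in plain Python. It is a textbook technique, not LEIP Optimize's algorithm, and the function names `quantize_uint8` and `dequantize` are hypothetical, not part of any LEIP API.

```python
# Generic 8-bit affine post-training quantization.
# Illustration only: this is NOT LEIP Optimize's actual algorithm or API.

def quantize_uint8(weights):
    """Map a list of floats to integers in [0, 255] with a scale and zero point."""
    # Include 0.0 in the range so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # Width of one quantization step; fall back to 1.0 for an all-zero input.
    scale = (w_max - w_min) / 255.0 or 1.0
    # Integer code that represents the float value 0.0.
    zero_point = round(-w_min / scale)
    # Round each weight to the nearest step and clamp to the uint8 range.
    q = [min(255, max(0, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float weights from their 8-bit codes."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    q, scale, zp = quantize_uint8(weights)
    restored = dequantize(q, scale, zp)
    print("codes:", q)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Storing each weight in 8 bits instead of 32-bit floating point cuts weight storage by 4x, at the cost of a per-weight rounding error of at most about half a quantization step (`scale / 2`). Production toolchains typically add refinements such as per-channel scales and calibration data, which this sketch omits.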

Benefits

Intuitive for beginners and experts alike

LEIP tools simplify complex AI tasks, making it easy for beginners to start and for experts to work efficiently. Our proven configurations let you create production models quickly while giving you granular control over every step of the machine learning workflow. Because everything is preconfigured, you can build, test, and iterate without specialized hardware expertise.

Benefits

Design, test, deploy, repeat

Repeatability is essential to scaling machine learning projects. LEIP promotes reuse at every stage, enabling you to create a reliable pipeline for easy retraining and updates. You can save, share, and adjust your workflows, effortlessly tracking the parameters applied to all model versions and hardware targets. And when it’s time to roll out updates, our standardized runtime engine ensures a smooth redeployment.

Benefits

Fits easily into your existing setup

LEIP seamlessly integrates into your existing ML environment, minimizing disruption and providing the ML tools your development teams need to succeed. Whether you’re starting with a dataset or already have a model in production, you can use LEIP to create ML solutions that work for you. And if you adjust your setup, LEIP’s flexible, modular tooling adapts to fit your changing needs.

ENGAGEMENT MODEL

Your AI, your way: flexible deployment models to streamline your AI capabilities.

Onsite

Streamline your ML development with tools that integrate into your existing enterprise software development environment.

Cloud

Accelerate AI software development with LEIP Cloud, the no-code platform for all skill levels.

Mobile

Modify, retrain, and redeploy edge AI models directly in the field.

SERVICES

Tackle your machine learning projects with confidence.

End-to-end ML implementation

Use our deep expertise in bringing AI to embedded edge devices to expedite your edge AI development.

- Consult and architect your edge AI solution.

- Integrate with data providers and data labeling partners.

- Assist with data ontology design and labeling.

- Design, optimize, and deploy ML models.

- Benchmark your models against various hardware targets.

- Secure your model runtime with watermarking and encryption.

- Reduce the time and network bandwidth needed to update machine learning models.

- Monitor model life cycles with runtime diagnostics.

Optimization and deployment

Get hands-on support where you need it, from bringing your model through LEIP Optimize to deploying it on your target hardware.

- Migrate cloud-only AI services to the edge.

- Reduce operational cost of AI inference with optimization for multiple hardware targets.

- Secure your model runtime with watermarking and encryption.

- Monitor model life cycles with runtime diagnostics.

Premium support services

Enjoy comprehensive support for all Latent AI products, including first-, second-, and third-line assistance.

- Immediate response through communication channels including Slack, Microsoft Teams, email, and telephone.

- Regular SDK tune-ups to keep all code libraries and recipes up to date and to train users on the latest features.

- Operational checks of fielded models to confirm they are deployed successfully and running at peak performance on target hardware.

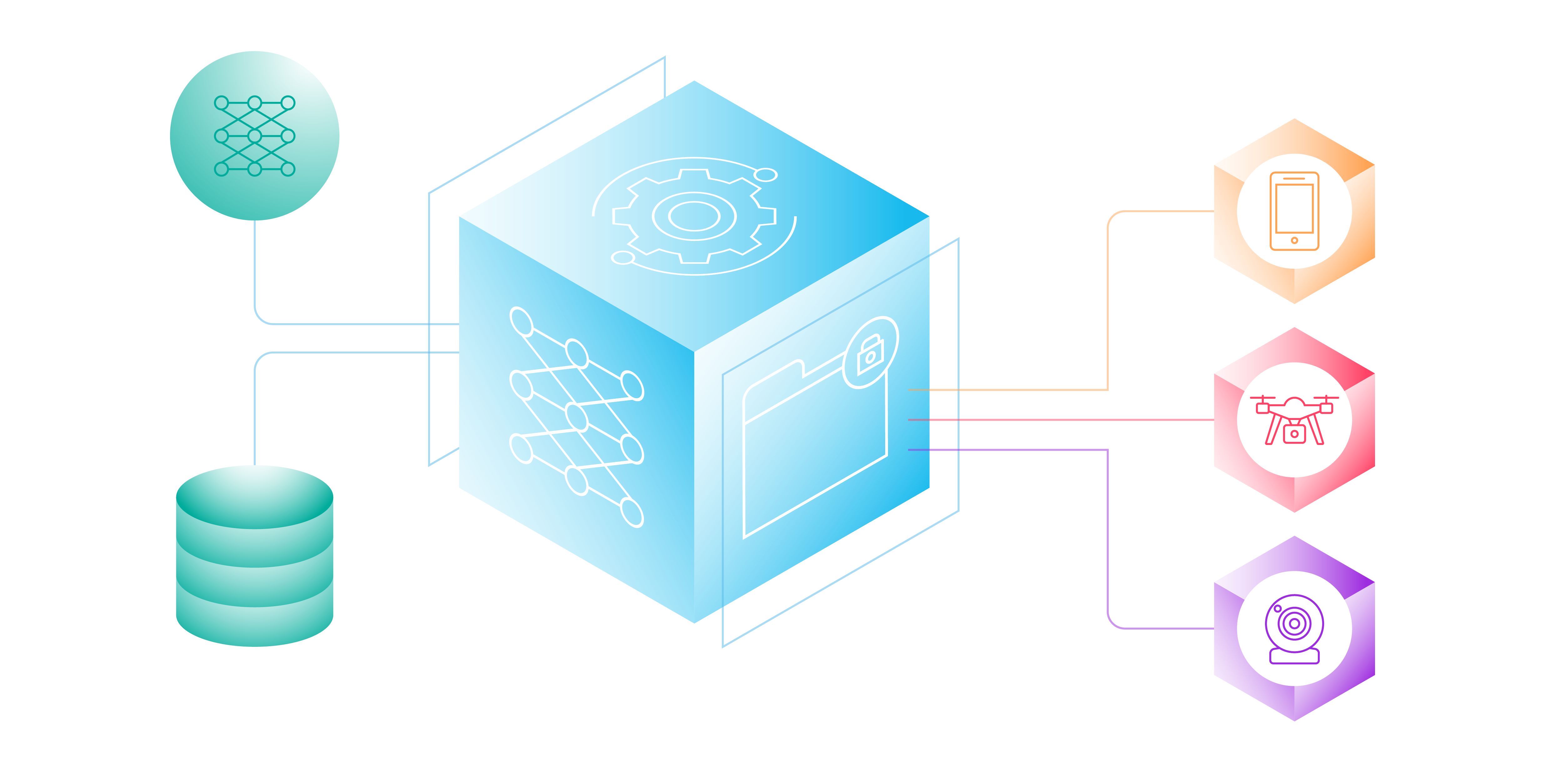

INTEGRATIONS

Connect to Latent AI through our technology integrations.

Resources

Gain technical and industry insights from our team.

FAQ

Frequently Asked Questions

Learn how Latent AI empowers ML engineers, data scientists, and software developers to build production-ready edge AI.

The LEIP MLOps platform streamlines every stage of the machine learning workflow, including:

- model design

- model development

- model training

- model deployment and serving

- model version management

- model monitoring

We’ve taken the complexity out of MLOps for edge AI applications through pre-tested model-hardware configurations and automated optimization for your target hardware.

Latent AI provides software engineers and data science teams with tools that automate and simplify the complex, time-consuming work of testing models and configuring them for edge hardware. Our end-to-end machine learning workflows expedite experiment tracking and model management, and help you quickly deploy models to various hardware targets.

Latent AI integrates easily with popular ML frameworks and formats such as TensorFlow, PyTorch, and ONNX.

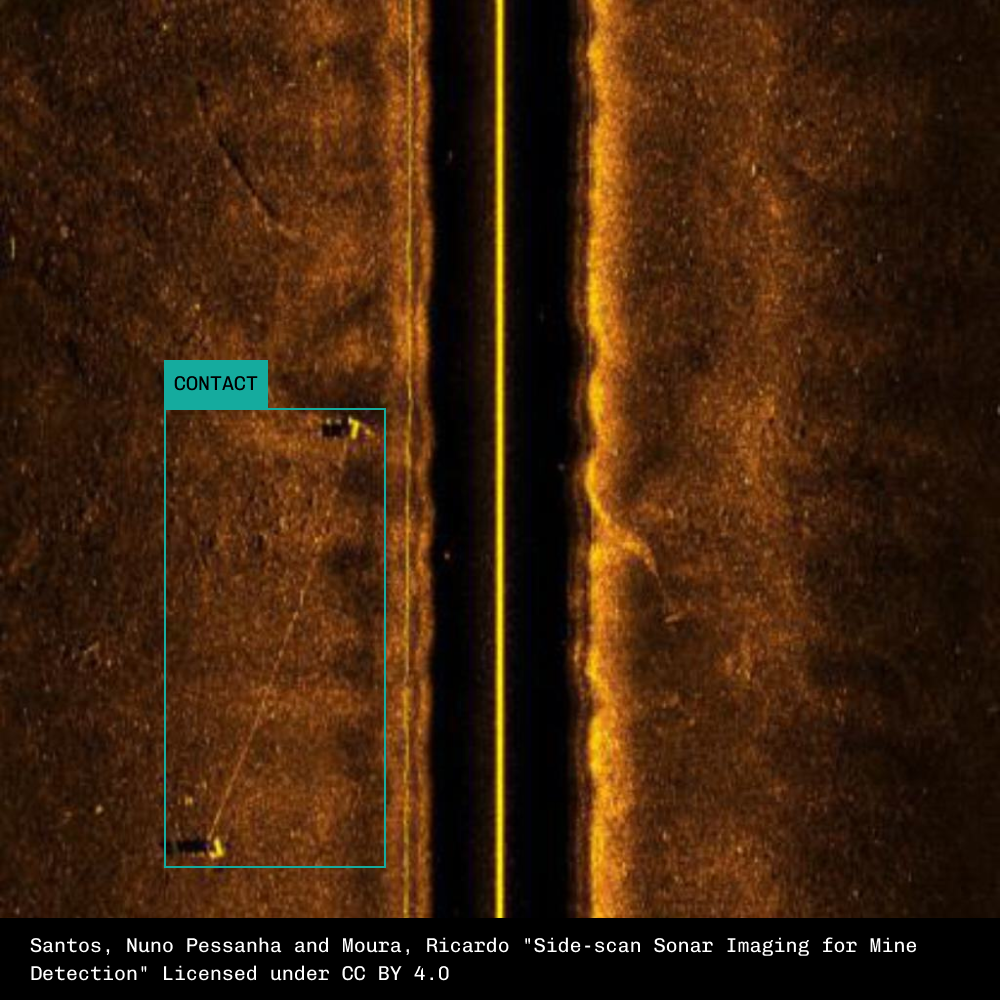

Latent AI supports multiple sensing modalities, including electro-optical, infrared, radio frequency, and sonar, for computer vision tasks such as detection, classification, and segmentation on edge devices. Latent AI’s tools optimize performance for real-time image and video processing.

Latent AI provides a standardized MLOps pipeline that integrates seamlessly with your existing tools. Our machine learning framework provides smart tools that simplify complex machine learning tasks, making it easy for beginners to start and for experts to work efficiently.