The new frontier of AI: How Latent AI is leading the shift from cloud to edge

As artificial intelligence continues to reshape industries across the globe, a quiet revolution is underway in how and where AI processing takes place. The traditional cloud-centric approach to AI deployment is giving way to edge computing, in which data is processed on or near the devices that generate it. This shift is particularly crucial for mission-critical applications in the defense, commercial, and emerging technology sectors, where real-time decision-making and tactical advantage are paramount.

By widely cited estimates, more than 90 percent of the world’s data has been generated in just the last two years, and 1.7 MB of data will be created every second for every person on Earth by 2025. This data explosion exposes the fundamental limitations of sending everything to the cloud: latency, bandwidth constraints, and privacy concerns.

“The reality is that data is being generated everywhere, but computing power isn’t evenly distributed to process this exponential explosion of information,” explained Jags Kandasamy, CEO of Latent AI. “Organizations can’t afford to wait for data to travel to distant cloud servers when split-second decisions need to be made at the edge.”

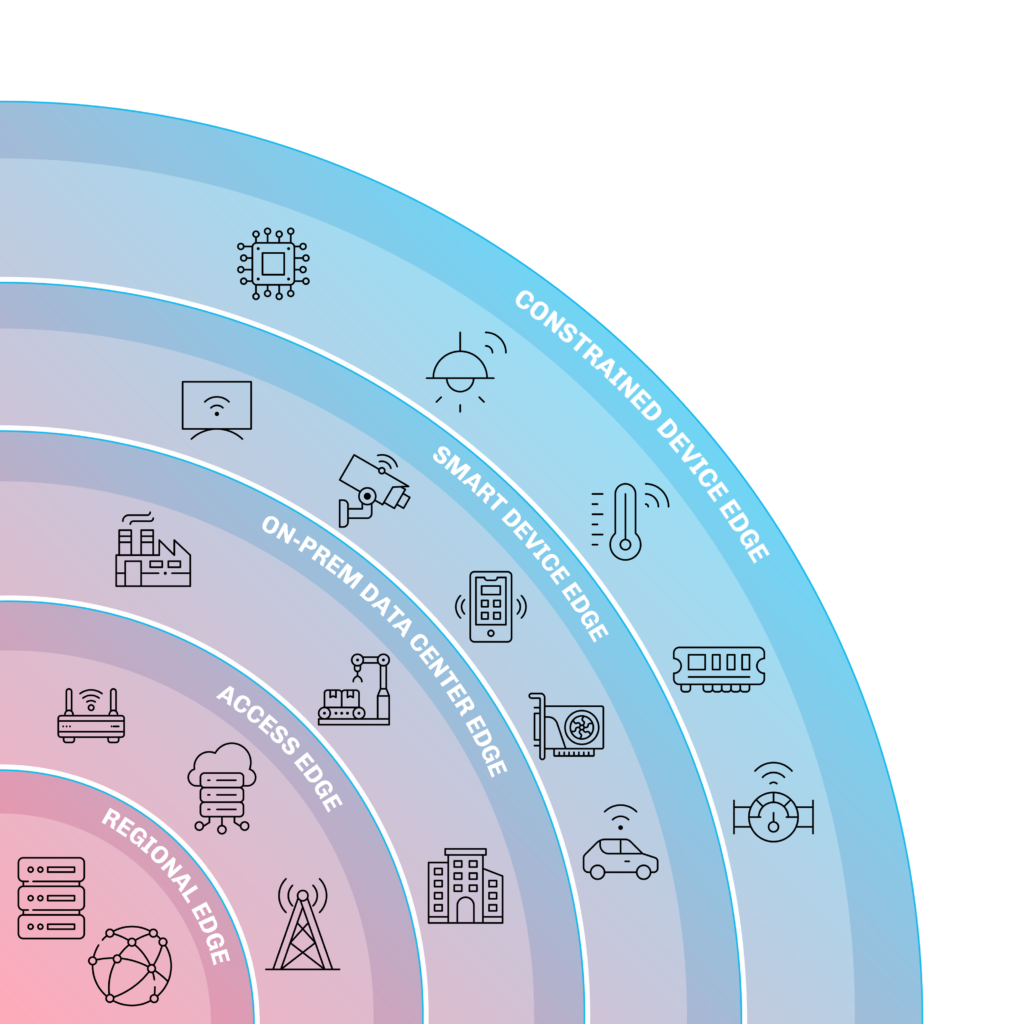

Latent AI’s approach, which the company calls Adaptive AI, rethinks how AI systems operate. Dr. Sek Chai, co-founder and CTO, explains the idea behind it: “We keep saying edge and cloud are binary descriptions, but in fact, there’s a lot of continuum between them. From a scaling perspective, it is not just a binary cloud versus edge, but everywhere along the continuum where you can put AI, and you don’t have to choose.”

Where a traditional AI model runs like an engine held at full throttle, Adaptive AI works more like a hybrid engine, adjusting the compute it uses to what the task at hand requires. This enables dynamic power management, adaptation to changing operating conditions, and consistent performance across diverse hardware targets, all while maintaining critical safeguards.
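To make the hybrid-engine analogy concrete, here is a minimal Python sketch of one way a device might choose among precompiled versions of the same model based on its current power and latency budgets. It is an illustration under stated assumptions, not Latent AI’s implementation: the `ModelVariant` type, the `select_variant` function, and every variant name and figure below are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelVariant:
    """One compiled version of the same model (hypothetical fields and values)."""
    name: str
    power_watts: float  # average power draw while running inference
    latency_ms: float   # time per inference on the target device
    accuracy: float     # validation accuracy between 0 and 1


# Hypothetical variants: full precision, 8-bit quantized, quantized and pruned.
VARIANTS = [
    ModelVariant("fp32", power_watts=12.0, latency_ms=40.0, accuracy=0.91),
    ModelVariant("int8", power_watts=4.0, latency_ms=15.0, accuracy=0.89),
    ModelVariant("int8_pruned", power_watts=1.5, latency_ms=8.0, accuracy=0.85),
]


def select_variant(
    variants: Sequence[ModelVariant],
    power_budget_watts: float,
    latency_budget_ms: float,
) -> Optional[ModelVariant]:
    """Return the most accurate variant that fits both budgets, or None if none fits."""
    feasible = [
        v for v in variants
        if v.power_watts <= power_budget_watts and v.latency_ms <= latency_budget_ms
    ]
    return max(feasible, key=lambda v: v.accuracy, default=None)


if __name__ == "__main__":
    # On external power with a relaxed deadline, the full-precision model fits.
    print(select_variant(VARIANTS, power_budget_watts=15.0, latency_budget_ms=50.0).name)  # prints "fp32"
    # On a low battery, only the smallest variant stays within budget.
    print(select_variant(VARIANTS, power_budget_watts=2.0, latency_budget_ms=50.0).name)  # prints "int8_pruned"
```

Re-running the selection whenever the battery level or the response deadline changes lets a device trade a little accuracy for longer runtime, rather than running at full power all the time.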

This addresses the complex challenges of AI deployment, especially in high-stakes environments such as military operations and critical infrastructure. By providing transparent decision-making, built-in ethical constraints, and robust protection against adversarial attacks, Adaptive AI aims to offer a more nuanced and responsible way to deploy artificial intelligence.

The technology is already seeing real-world use. In the U.S. Navy’s Project AMMO, Latent AI cut AI model update times by a factor of 18, demonstrating the potential of edge AI to transform operational capabilities.

“The edge is incredibly hard. It’s heterogeneous,” Kandasamy emphasized. “Building AI in the cloud is challenging. But using AI in a battery-powered device, disconnected from the cloud, is fundamentally different. We’re solving these challenges by making edge AI deployment practical, efficient, and secure.”

In this view, the future of AI is not about choosing between cloud and edge, but about building an intelligent computational ecosystem that distributes workloads dynamically along the continuum Dr. Chai describes.

Processing data where it is generated reduces latency, keeps sensitive data local, lowers bandwidth costs, improves resilience when connectivity is lost, and enables real-time decision-making. With the release of LEIP Design, Latent AI aims to simplify edge AI deployment by offering over 1,000 pre-qualified model recipes, reducing the time and expertise required to implement AI at the edge and putting advanced AI capabilities within reach of more organizations.

In a world generating exponential amounts of data, the ability to process information at its source is becoming increasingly critical. Latent AI is not just developing technology; it is building an approach to computational intelligence that puts processing power where the data is generated.

“As we move forward, our focus remains on enabling organizations to deploy AI capabilities where they’re needed most – at the tactical edge where real-world decisions are made,” Kandasamy concluded. “The future of AI isn’t in the cloud – it’s at the edge, and we’re making that future possible today.”

The true potential of artificial intelligence lies not in distant servers, but in the devices all around us. Welcome to the new frontier of edge computing – where intelligence knows no boundaries.