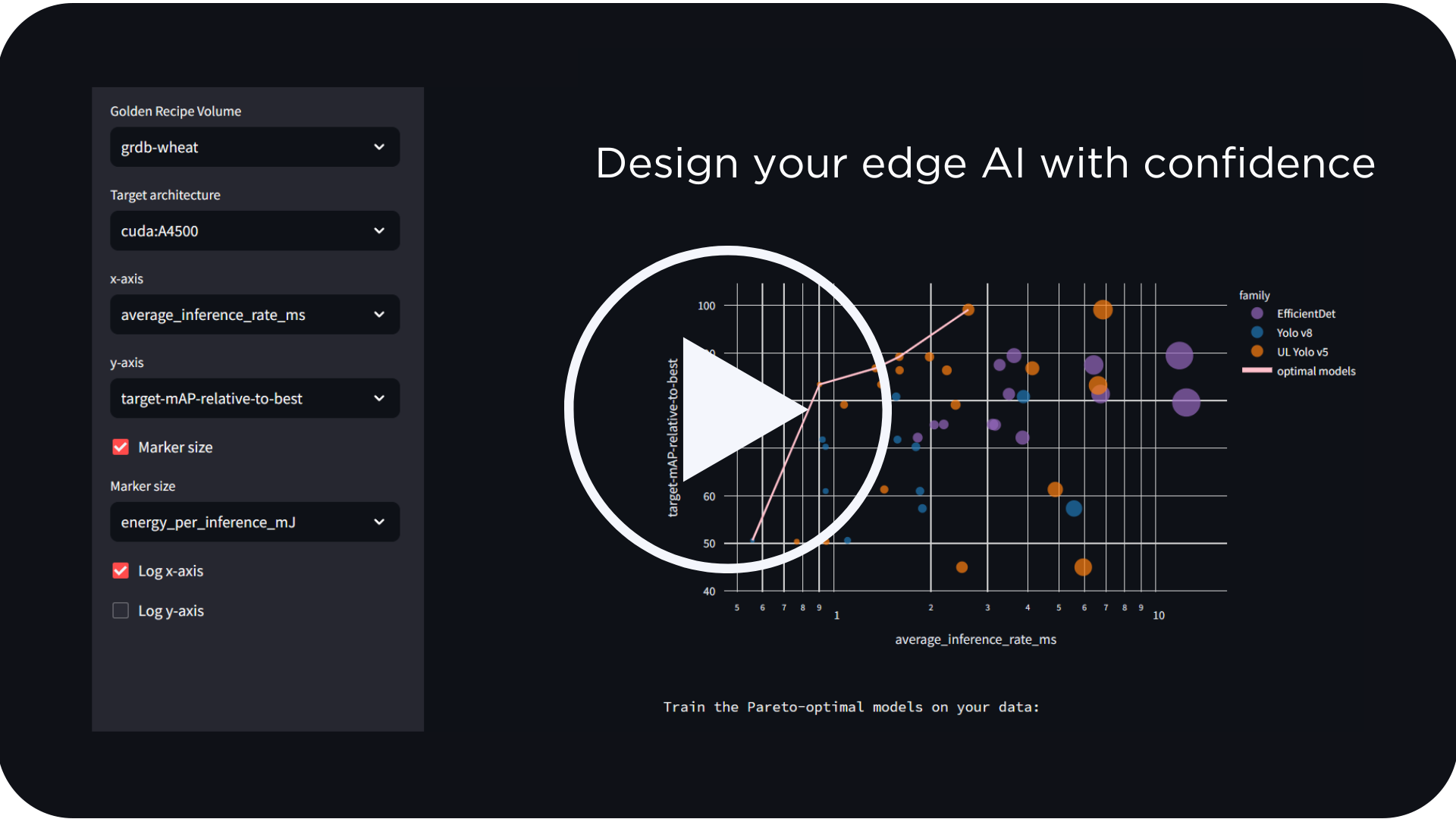

See how LEIP enables you to design, optimize and deploy AI to a variety of edge devices–at scale. Schedule a Demo

Take the hard work out of your edge AI development with Latent AI

Edge AI is crossing the chasm!

For several years now, we have witnessed the success stories of early Edge AI adopters such as autonomous vehicles and intelligent voice assistants. Now, enterprises can capitalize on the value of Edge AI and create significant revenue opportunities through the smart edge.

Edge AI enables real-time, low-latency user experiences while providing increased information security and privacy for users. For mission-critical systems, Edge AI ensures automated decision making without relying on network connectivity. Businesses can take advantage of major technological advances in semiconductor chips, IoT sensors and ML algorithms to run AI on the edge. Running inference on the edge also reduces cloud and infrastructure costs, and it is more climate-friendly because it cuts the energy consumed by cloud data centers and networks.

But Edge AI is really hard.

The traditional ML pipeline runs both training and inference in the cloud, where it is completely hardware-agnostic and has effectively no limits on compute and memory. Deploying Edge AI adds a critical step: the inference "handoff" to a highly resource-constrained edge device. During this handoff, models trained in the cloud must be heavily optimized and customized for a specific hardware target, which can be a very time-consuming and frustrating process. Hardware vendor tools for ML optimization are notoriously difficult to use and produce inconsistent, unreliable and unexplainable models. This is one of the main reasons Edge AI projects fail.

How can Latent AI help your Edge AI journey?

The Latent AI Efficient Inference Platform™ (LEIP) is a modular, fully integrated Edge AI development platform that optimizes the compute, energy consumption and memory allocation of tactical edge devices without requiring changes to existing model infrastructure or edge system designs. LEIP enables rapid, highly scalable and adaptable AI application development at the tactical edge, regardless of framework, operating system, architecture or hardware.

How can European companies take advantage of Latent AI’s capabilities?

To support enterprises in the European market, we are offering Latent AI in collaboration with an easily accessible, Europe-based compute platform. We are excited to announce that we have partnered with Green AI Cloud to make this possible.

We picked Green AI Cloud for three reasons:

- Compute power: Green AI Cloud is the leading high-speed compute platform in Europe, 100 to 150 times faster than the standard legacy data centres available today.

- Security: Green AI Cloud is a security level 4 facility and is additionally classified as security level 3 by the Swedish Defence Materiel Administration (FMV).

- Sustainability: The Green AI Cloud compute platform is the global leader in sustainability. It uses only renewable energy sources (wind and hydropower) and reuses the excess heat from its server hall to power a plant that produces wood pellets (a biofuel for domestic heating). According to the Greenhouse Gas Protocol, this makes the entire process CO2-negative. By using the Green AI Cloud compute platform, you actually reduce global CO2 emissions!

The Latent AI Efficient Inference Platform (LEIP) compresses and optimizes neural nets for any hardware target in the edge continuum. With its Adaptive AI℠ technology, LEIP compresses conventional AI models below 8-bit integer precision without a noticeable change in accuracy, delivering more than 10x memory savings and 3x faster inference through software-only optimizations.
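For intuition about why going below 8 bits matters for memory, here is a minimal sketch of generic symmetric uniform quantization in plain Python. It is not LEIP's Adaptive AI method, which this post does not describe. The function names are hypothetical, and the sketch assumes a float32 baseline and a single per-tensor scale.

```python
# Illustrative sketch only: generic symmetric uniform quantization.
# This is NOT LEIP's Adaptive AI method; all names here are hypothetical.

def quantize_symmetric(weights, bits):
    """Quantize a list of floats to signed integers using `bits` bits per value.

    Uses one scale for the whole list (per-tensor) and a symmetric range
    [-qmax, qmax], where qmax = 2**(bits - 1) - 1.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed symmetric range")
    qmax = 2 ** (bits - 1) - 1
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, which would otherwise divide by zero.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp into the allowed range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the integers back to approximate float values."""
    return [v * scale for v in q]


def compression_ratio(bits, baseline_bits=32):
    """Ideal storage ratio versus float32, ignoring scale metadata and bit-packing overhead."""
    return baseline_bits / bits


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.1]
    q, scale = quantize_symmetric(weights, bits=4)
    approx = dequantize(q, scale)
    print("quantized:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, approx)))
    for b in (8, 4, 3):
        print(f"{b}-bit: {compression_ratio(b):.2f}x smaller than float32")
```

The arithmetic alone shows the trend: moving from 32-bit floats to 8-bit integers gives at most a 4x reduction in weight storage, while 3-bit weights give about 10.7x. Real toolchains also have to store scales and pack sub-byte values, so actual savings depend on the implementation.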

This partnership allows customers to train their AI models sustainably in Green AI Cloud and seamlessly optimize them for edge deployment. With Green AI Cloud and Latent AI, you now have access to a high-performance ML pipeline that is also remarkably good for our planet! Email us at info@latentai.com to learn more about how you can contribute to a sustainable future!