DevOps for ML Part 3: Streamlining Edge AI with LEIP Pipeline

Part 1: Optimizing Your Model with LEIP Optimize

Part 2: Testing Model Accuracy with LEIP Evaluate

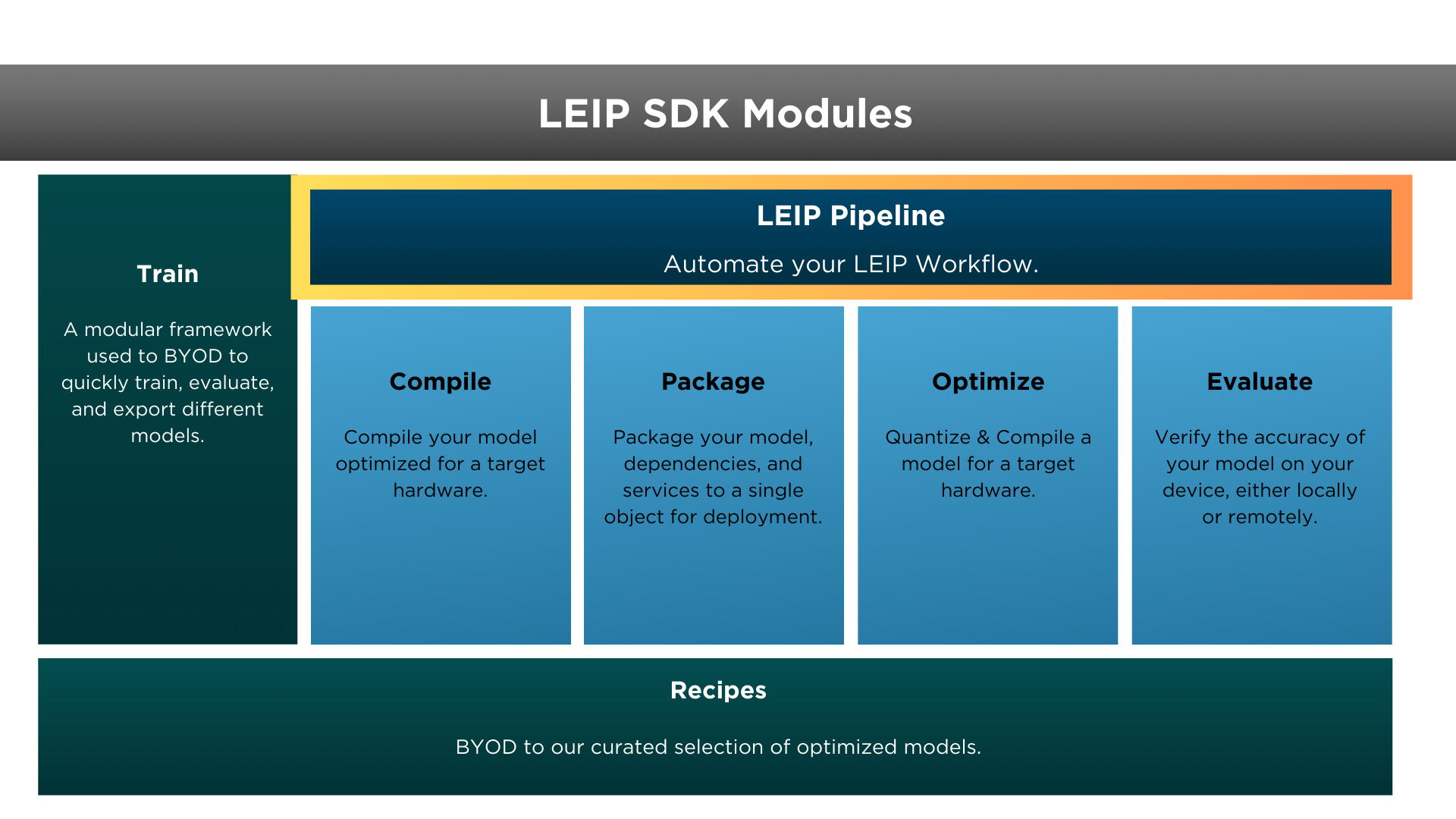

Welcome to Part 3 of our ongoing DevOps for ML series, which details how the components of LEIP can help you rapidly produce optimized and secured models at scale. In Parts 1 and 2, we explored model optimization and accuracy testing with LEIP Optimize and LEIP Evaluate. Now, get ready to LEIP to the next level with LEIP Pipeline!

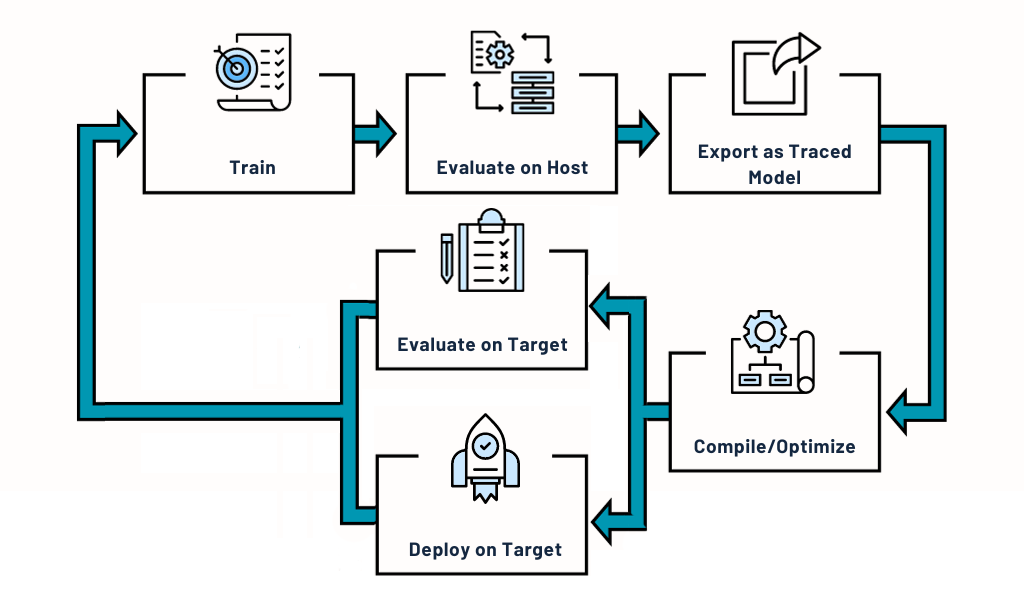

LEIP Pipeline helps you automate key edge AI tasks by chaining LEIP module commands and their outputs into an easily shareable workflow. With LEIP Pipeline, you are in control. You can run a single inference, evaluate a model’s performance before and after it is optimized for low-SWaP (size, weight, and power) devices, or quickly test different hardware targets to see which performs best. This dramatically lowers the time it takes to find the best combination of model and hardware for your specific requirements and use case. Say goodbye to tedious trial and error and hello to a faster, smarter way to find that model-hardware combination.

LEIP Pipeline is versatile and user-friendly. It runs from a command line interface or a Python API call and lets you automate and execute multiple LEIP tasks. Tasks are defined and configured in a YAML or JSON file, or you can construct them with the Python API. Command configurations can be grouped together, applied to the desired models, and shared with anyone who needs to run them.
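To make the idea of a file-defined workflow concrete, here is a minimal sketch of an ordered task list built in Python and serialized to JSON. It does not use the LEIP Pipeline API or its real configuration schema: the `build_pipeline` helper, the key names (`name`, `model`, `tasks`, `command`, `options`), the option values, and the model path are all hypothetical placeholders. Refer to the published JSON schema for the actual field names.

```python
import json


def build_pipeline(name, model_path, tasks):
    """Return a plain dict describing an ordered list of tasks.

    The layout is illustrative only; it is NOT the LEIP Pipeline schema.
    """
    return {
        "name": name,
        "model": model_path,
        "tasks": [
            {"command": command, "options": dict(options)}
            for command, options in tasks
        ],
    }


# Evaluate a model, optimize it, then evaluate the optimized result.
pipeline = build_pipeline(
    "evaluate-optimize-evaluate",
    "models/example_model",  # placeholder path
    [
        ("evaluate", {"label": "baseline"}),   # hypothetical option
        ("optimize", {"bits": 8}),             # hypothetical option
        ("evaluate", {"label": "optimized"}),  # hypothetical option
    ],
)

# Serialize to JSON so the same workflow can be shared and re-run by others.
config_text = json.dumps(pipeline, indent=2)
print(config_text)
```

Keeping the workflow as plain data is what makes it shareable: the same file can be checked into version control and applied to a different model by changing a single field.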

LEIP Pipeline lets you run experiments involving one or more uses of LEIP Compile, LEIP Optimize, LEIP Evaluate, or LEIP Package, across one or several models and one or more platforms. For example, you can:

- Compile or optimize a model with a given configuration.

- Evaluate a model, optimize it, and then evaluate the optimized model to compare metrics.

- Optimize a model for different targets and connect to edge devices running LOR servers for real-world inference tests, gaining real-time insight into your model’s performance.

- Optimize a model using distinct optimization settings, like the quantization method or calibration method, and evaluate them.

- Optimize and evaluate several similar models to compare metrics.
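Experiments like these amount to sweeping over combinations of models, targets, and optimization settings. The sketch below shows one way to enumerate such a sweep in plain Python. It is an illustration of the bookkeeping only, not LEIP Pipeline code: the `expand_experiments` helper, the dictionary keys, and every value (`model_a`, `target_x`, `quant_1`, `calib_1`, and so on) are hypothetical placeholders rather than real LEIP option names.

```python
from itertools import product


def expand_experiments(models, targets, quantizers, calibrations):
    """Return one experiment description per combination of the inputs."""
    experiments = []
    for model, target, quant, calib in product(
        models, targets, quantizers, calibrations
    ):
        experiments.append(
            {
                "id": f"{model}-{target}-{quant}-{calib}",
                "model": model,
                "target": target,
                "quantizer": quant,
                "calibration": calib,
            }
        )
    return experiments


# All names below are placeholders, not real LEIP settings.
experiments = expand_experiments(
    models=["model_a"],
    targets=["target_x", "target_y"],
    quantizers=["quant_1", "quant_2"],
    calibrations=["calib_1"],
)

for experiment in experiments:
    print(experiment["id"])
```

Each resulting entry could then be turned into its own optimize-and-evaluate run, so the metrics for every combination can be compared side by side.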

Our documentation offers comprehensive API references and a collection of downloadable YAML configurations. For those seeking in-depth technical insights, a detailed JSON schema is readily available for exploration.

If you need a way to quickly add AI to your application, rapidly prototype models for different hardware, or simplify your model design and delivery with dedicated DevOps for ML, get in touch with us today at info@latentai.com.

For the LEIP SDK User Guide and LEIP Recipes documentation, visit our Resource Center.