DevOps for ML Part 2: Testing model accuracy with LEIP evaluate

Part 1: Optimizing Your Model with LEIP Optimize

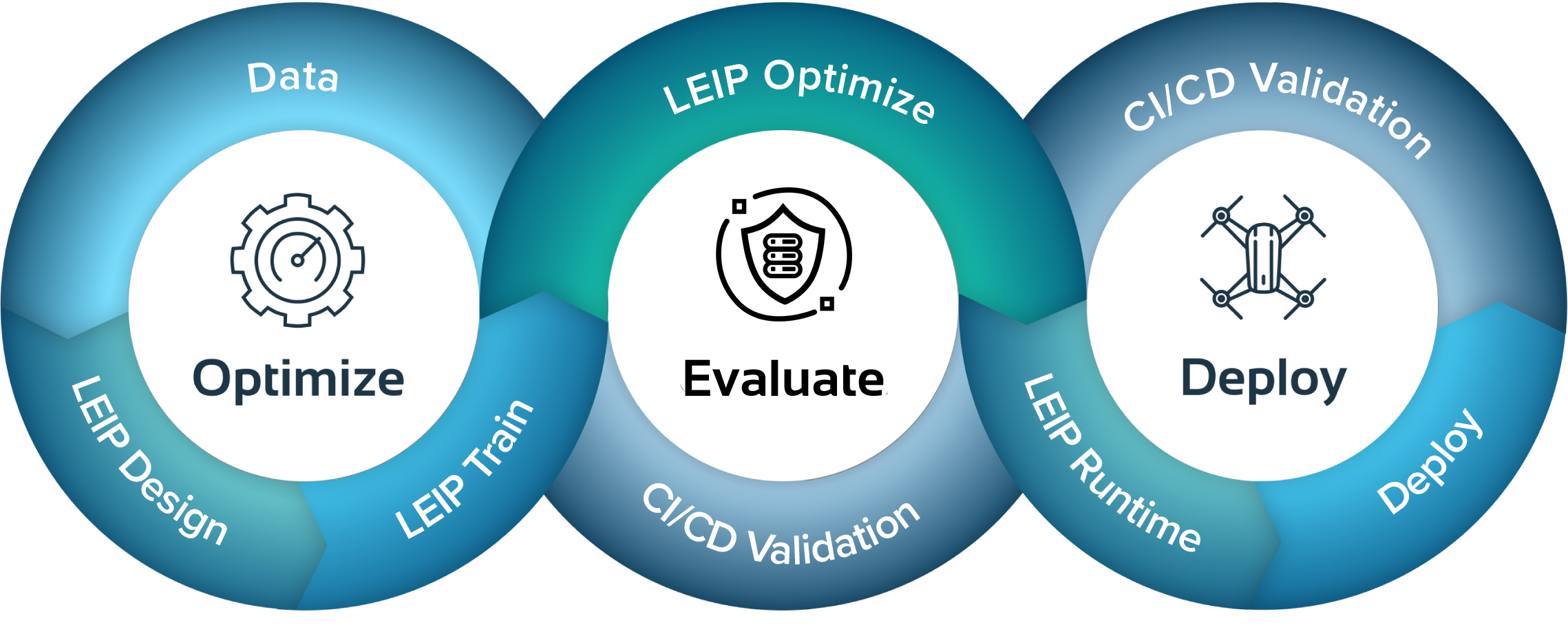

The Latent AI Efficient Inference Platform (LEIP) SDK creates dedicated DevOps processes for ML. With LEIP, you can produce secure models optimized for memory, power, and compute that can be delivered as an executable ready to deploy at scale. But how does it work? How do you actually go from design to deployment?

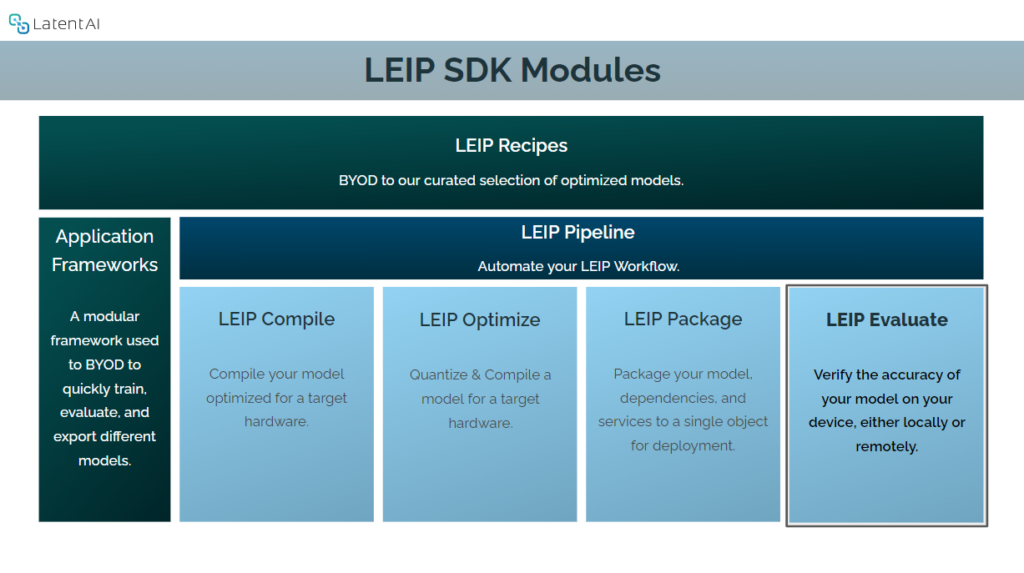

The previous post in our ML Lifecycle series walked through the first step of producing a model with LEIP Optimize, one of the LEIP SDK’s core modules. It optimizes models for memory, power, and compute while also compiling them into an executable package for easy deployment. However, models must also be evaluated for accuracy and authenticity after they have been trained, exported, and optimized.

LEIP Evaluate

LEIP Evaluate allows you to verify the accuracy of a model on your desired target devices. Whether you are developing your model on-premise or in the cloud, LEIP Evaluate lets you run an evaluation pipeline in your SDK container, while performing remote inference on any number of remote edge devices.

LEIP Evaluate can assess the accuracy of a compiled and optimized model across several stages in the LEIP tool chain including before and after running LEIP Compile and LEIP Optimize using the the following evaluation methods:

- Test and Evaluation (T&E) – When the model is being developed/trained.

- Verification and Validation (V&V) –When the model is being updated/refined during deployment.

T&E builds trust and V&V maintains trust in the deployed AI. Trust must be established for both developer and operator because it is important to establish the data and model provenance.

LEIP Evaluate takes in two inputs to begin the process. The first input is a path to a model folder or a file. The second input is a path to a testset file. The model is loaded into the specified runtime and performs inference of the input files specified in the testset. This results in LEIP Evaluate generating two outputs: the accuracy metric along with information about the number of inferences per second. The accuracy metric shows how close the evaluated model is to the original uncompiled model. The number of inferences per second measures how many predictions a machine learning model can make in one second. Both the accuracy metric and the number of inferences per second are indicators of the performance and efficiency of a model.

LEIP Evaluate may be used entirely within the SDK container or may be used to perform evaluation using remote inference. While evaluation within the SDK is limited to testing against the hardware architecture of the host computer, remote inference allows a user to evaluate against the hardware architecture of the intended remote edge device. For remote inference, a process called the Latent AI Object Runner (LOR) is installed on the device under test. The compiled and optimized model will be passed directly to LEIP Evaluate when the function is executed, and LEIP Evaluate will communicate with the LOR to copy over the model, send samples for inference, and receive the results for post processing and scoring.

LEIP is an end-to-end SDK to train, optimize, and deploy secure NN runtimes all within the same workflow. NN runtimes can be more efficient and robust when optimization and security are implemented. In addition, NN runtimes can be integrated with security protocols that protect against reverse engineering.

Now that we’ve introduced LEIP Optimize and LEIP Evaluate, the next module in our series is LEIP Pipeline, which allows a user to perform tasks such as optimization, evaluation and more sequentially.

For Documentation of our LEIP SDK User Guide and LEIP Recipes, visit our Resource Center. For more information, contact us at info@latentai.com.