Solving Edge Model Scaling and Delivery with Edge MLOps

The sheer amount of data, and the number of devices collecting it, mean that sending everything to the cloud for processing is too slow and does not scale. Processing has to move closer to the source of the data, at the edge. But getting AI models to work on edge devices fails far more often than it succeeds. Putting models on resource-constrained devices is a complex, lengthy, and often manual process that requires numerous iterations to reduce model size and improve efficiency. And that process has to be tailored to, and repeated for, each combination of model and target hardware.

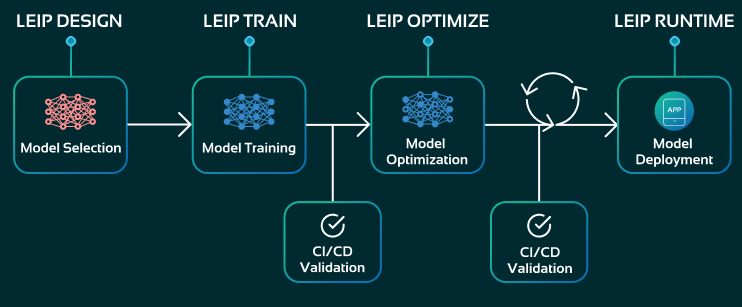

But what if those pain points could be eliminated? We developed LEIP, the Latent AI Efficient Inference Platform, to give organizations what they've been missing: a single tool that provides a repeatable and scalable path for delivering optimized edge models quickly. With LEIP, the machine learning development process is cast as a software development workflow, so developers can build trust in their models using familiar validation processes. LEIP lets you train your model and then carry it all the way to edge devices reliably and at scale, as a compressed, ultra-efficient, and secured model built for the target hardware.

LEIP is model-, application-, and hardware-agnostic. It comes with customizable templates called Recipes that are pre-qualified for your target hardware and reduce maintenance and configuration to a single command-line call. Because the target platform is already accounted for, iterations are much faster. Models that used to take months to train and configure can now be edge-optimized in hours.

LEIP can take your AI model from development to device simply, reliably, and securely with a dedicated and principled edge MLOps workflow designed to support rapid prototyping and deployment. Contact info@latentai.com to schedule a demo today.