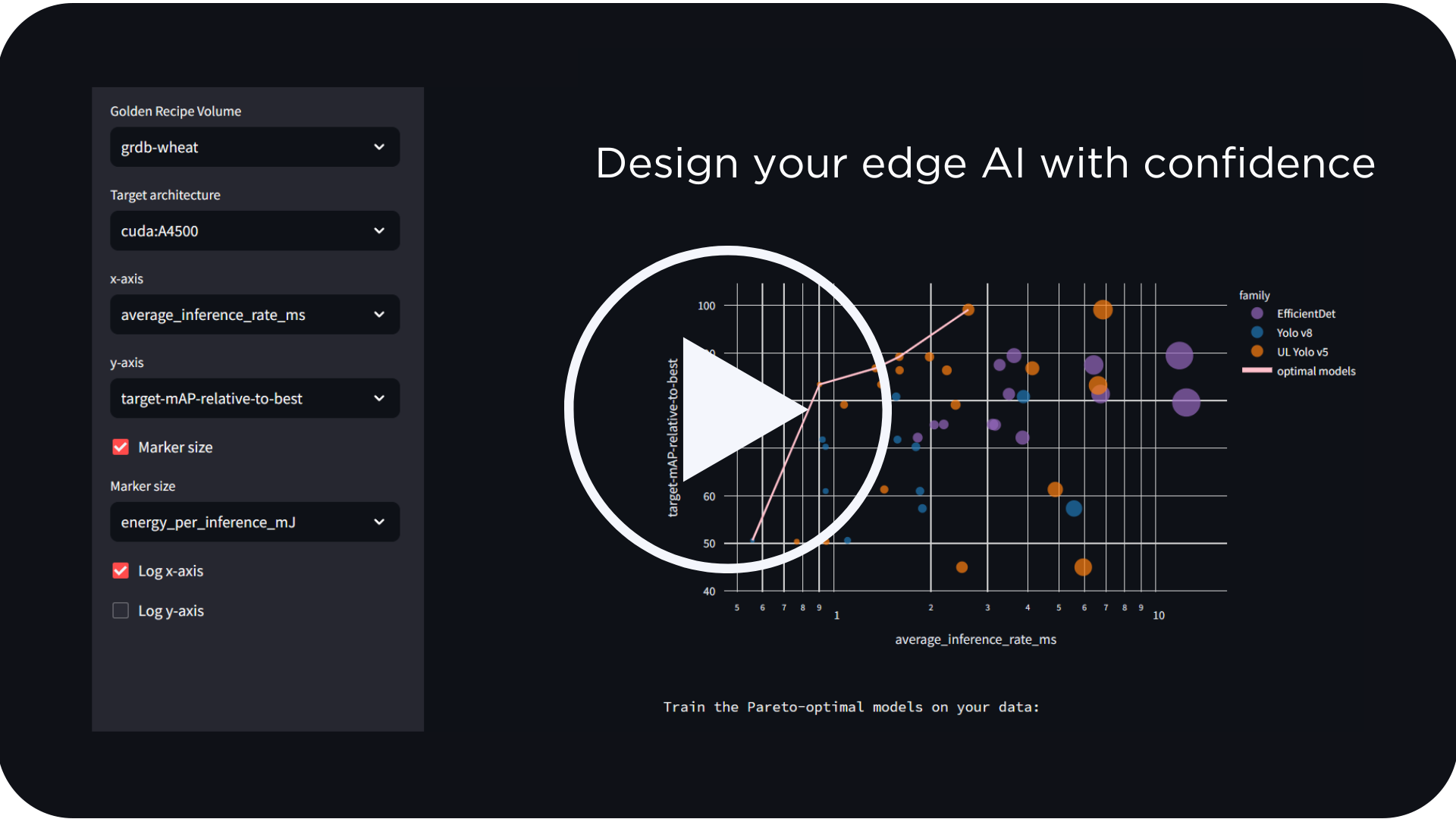

Edge AI most often fails because traditional models are simply too big or too slow to run on resource-constrained devices. By design, standard models either compute every part of their neural network or none of it. When AI models run continuously, they drain power rapidly. And the larger the model, the heavier the hardware needed to support it. Think of a warfighter who has to turn off an oversized AR/VR headset to conserve both battery power and physical stamina. Meanwhile, relying on the cloud for computing increases latency and slows responsiveness. Considering how rapidly edge device data collection is growing, and the importance of what those devices are responsible for, the constraints on running standard AI on edge devices are only going to grow.

The real world needs AI that can deliver insights and results far faster than traditional methods. And it needs models that can work when power is limited, or even when the device is disconnected from a network. Those needs extend across a wide swath of industries, including manufacturing, agriculture, oil and gas, and any other industry that can benefit from more efficient data gathering and processing at the edge to solve computer vision problems.

Unleashing edge computing with Adaptive AI

AI that can react to changing environmental conditions is key to expanding what edge AI can do. Adaptive AI represents a fundamental shift in the way AI is trained. Traditional models have inference processing that is defined during training and can’t be adjusted later. What that ultimately means is that every part of your neural network needs to fire to arrive at an answer. If your deep neural network has a million parameters, your runtime engine computes with all million of them on every inference.

Obviously, that is a challenge for compute-constrained devices. Adaptive AI resolves it by enabling the model to react dynamically and rely only on the parts of its neural network that are required to process the data being collected. Adaptive AI is exactly what its name implies: artificial intelligence that can change to meet new conditions. It enables edge models to adapt and self-adjust to their workloads depending on their needs and environments. It uses attention and context to engage only the parts of the neural network that it needs, all of which can be done locally on compute-constrained edge devices.
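To make the idea of computing only part of a network concrete, here is a minimal sketch of early-exit inference, one well-known form of conditional computation. It is an illustration under stated assumptions, not a description of how Adaptive AI is implemented: the function `adaptive_forward`, the confidence threshold, and the tiny hand-picked weight matrices are all hypothetical. A cheap classifier head runs first, and the deeper layers are skipped whenever that head is already confident.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Tuple[Vector, int]:
    """Return w @ x and the number of multiply-accumulates (MACs) it cost."""
    out = [sum(wi * xi for wi, xi in zip(row, x)) for row in w]
    return out, len(w) * len(x)


def softmax(z: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_forward(
    x: Vector,
    early_head: Matrix,
    deep_layers: List[Matrix],
    final_head: Matrix,
    confidence: float = 0.9,
) -> Tuple[int, int]:
    """Return (predicted class, MACs used).

    Hypothetical early-exit scheme: if the cheap head is confident enough,
    the deep layers are never computed.
    """
    logits, macs = matvec(early_head, x)
    probs = softmax(logits)
    if max(probs) >= confidence:
        return probs.index(max(probs)), macs  # early exit

    h = x
    for w in deep_layers:
        h, used = matvec(w, h)
        h = [max(0.0, v) for v in h]  # ReLU
        macs += used
    logits, used = matvec(final_head, h)
    macs += used
    return logits.index(max(logits)), macs


# Hand-picked toy weights (assumptions for illustration, not trained values).
EARLY_HEAD = [[4.0, 0.0], [0.0, 4.0]]
IDENTITY = [[1.0, 0.0], [0.0, 1.0]]
DEEP_LAYERS = [IDENTITY, IDENTITY]
FINAL_HEAD = IDENTITY

easy = adaptive_forward([2.0, 0.0], EARLY_HEAD, DEEP_LAYERS, FINAL_HEAD)
hard = adaptive_forward([0.6, 0.5], EARLY_HEAD, DEEP_LAYERS, FINAL_HEAD)
print("easy input:", easy)  # exits after the cheap head
print("hard input:", hard)  # falls through to the full network
```

In this toy setup the unambiguous input costs 4 multiply-accumulates, while the ambiguous one costs 16 because it runs every layer. The work done scales with how hard the input is, which is the property the paragraph above describes.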

Context-based power and memory management

This short proof-of-concept demonstration shows how Adaptive AI unleashes the power of edge computing with context-based power and memory management.

It’s a person recognition model that only activates when triggered by motion. When there is no motion, the model throttles down. The AI model adapts and self-adjusts to its workload depending on its needs and environment. Only when a person enters the scene does the more complex AI model run to detect other items. Because the larger model triggers only when it is needed, the device saves power and extends battery life.
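The gating logic in this demonstration can be sketched as a three-stage cascade. The code below is a hedged illustration, not the demo's actual implementation: `GatedPipeline`, `motion_score`, the motion threshold, and the two toy detectors are hypothetical stand-ins, and frames are plain lists of grayscale pixel values so the example runs without any libraries.

```python
from typing import Callable, Dict, List, Optional

Frame = List[List[int]]  # grayscale pixels, 0-255


def motion_score(prev: Frame, curr: Frame) -> float:
    """Mean absolute pixel difference between two equally sized frames."""
    total = 0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(a - b)
            count += 1
    return total / count if count else 0.0


class GatedPipeline:
    """Runs each more expensive stage only when the cheaper stage fires."""

    def __init__(
        self,
        person_detector: Callable[[Frame], bool],
        heavy_detector: Callable[[Frame], List[str]],
        motion_threshold: float = 5.0,  # assumed value, tune per camera
    ) -> None:
        self.person_detector = person_detector
        self.heavy_detector = heavy_detector
        self.motion_threshold = motion_threshold
        self.prev: Optional[Frame] = None
        self.calls: Dict[str, int] = {"motion": 0, "person": 0, "heavy": 0}

    def step(self, frame: Frame) -> List[str]:
        prev, self.prev = self.prev, frame
        if prev is None:
            return []  # no reference frame yet
        self.calls["motion"] += 1
        if motion_score(prev, frame) < self.motion_threshold:
            return []  # no motion: stay throttled down
        self.calls["person"] += 1
        if not self.person_detector(frame):
            return []  # motion, but no person in the scene
        self.calls["heavy"] += 1
        return self.heavy_detector(frame)  # full model runs only now


# Toy stand-ins for real models (assumptions for illustration only).
def toy_person_detector(frame: Frame) -> bool:
    return max(max(row) for row in frame) >= 200


def toy_heavy_detector(frame: Frame) -> List[str]:
    return ["person", "backpack"]


empty = [[0] * 4 for _ in range(4)]
noise = [[10] * 4 for _ in range(4)]     # motion, but no person
person = [[255] * 4 for _ in range(4)]   # motion and a person

pipeline = GatedPipeline(toy_person_detector, toy_heavy_detector)
results = [pipeline.step(f) for f in [empty, empty, noise, person, person]]
print(results)
print(pipeline.calls)
```

Across the five frames, the cheap motion check runs four times, the person detector twice, and the heavy detector once. One consequence of this design choice is that a person who stops moving no longer triggers the heavy model; a real system would likely add a hold-off timer or tracking to cover that case.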

Flexibility that maintains results

Adaptive AI is designed for edge AI success because it fundamentally addresses three major concerns:

- Robustness – Can the model maintain its accuracy even when run on compute and power constrained edge devices?

- Agility – Can the model adapt to changing circumstances? Can it help secure itself? Can it power down or up based on need?

- Efficiency – Can the model be trained quickly enough to be relevant? Are the insights being delivered fast enough?

Adaptive AI answers these concerns, and more. It represents a new way to run your neural network that dynamically minimizes the working footprint for both memory and compute, and finally creates the opportunity for sustained edge AI success. It’s flexibility that maintains results while building in speed and sustainability. Adaptive AI makes edge AI truly intelligent and dynamic.

Adaptive AI enables far faster and more responsive edge AI that can operate independently while conserving power and maintaining accuracy. The applications for the military and other industries that need smarter and faster edge AI are only going to grow. Adaptive AI frees application developers from edge device constraints and lets them leverage models that require less power, are more lightweight, and run faster. ML developers can train larger models that are more accurate because attention and context help guide the neural network pathways. And MLOps teams and system integrators who bundle their AI processes into larger solutions can leverage Adaptive AI to create models that are far easier to scale.

For more information about Adaptive AI, see:

The Next Wave in AI and Machine Learning: Adaptive AI at the Edge

The Adaptive AI™ Approach: Run Deep Neural Networks with Optimal Performance

Or to learn more about how Latent AI technology can help you capture the latent knowledge waiting to be discovered in your training data, and do it faster, contact us at info@latentai.com.