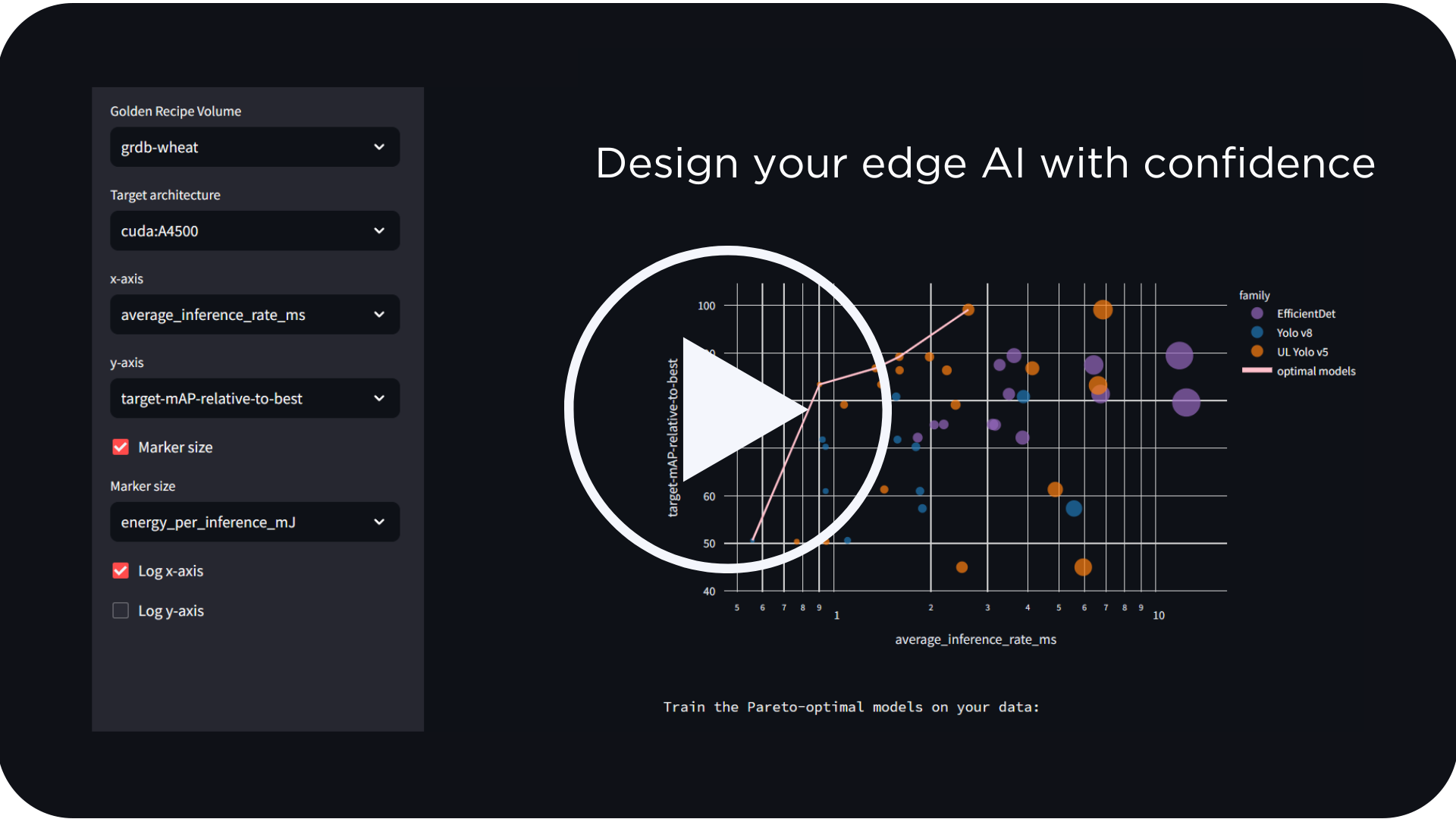


If you are an AI engineer who is dissatisfied with the speed or power consumption of your neural network inference, and you believe no better-performing solution exists, keep reading!

I’d like to introduce a new way to run your deep neural networks at their optimal operating point. It is dynamic, context-aware, and flexible. It is Adaptive AI℠.

This article offers some insight into a new framework and tooling that go beyond what you can currently achieve with pruning and quantization. As a follow-up to an earlier article, The Next Wave in AI and Machine Learning, I will address the third tenet of Adaptive AI (Agility) with a prototype application that highlights a new class of efficient AI on the edge.

Status Quo is So Yesterday

Today, inference processing is fixed at training time: you are expected to compute every part of your neural network to get an answer. If your deep neural network has a million parameters, your runtime engine computes with all million of them, for every input.

However, this status quo approach to inference is not optimal from either a learning or a computational perspective. Just as a cognitive task does not require every neuron in your brain to fire, you do not need to process every parameter in your deep network to get a quality answer.

Bear in mind that you cannot simply take today’s trained network and start dropping neurons from inference processing. If you do, you will likely find that the network’s algorithmic performance (i.e., accuracy) falls rapidly toward zero. This happens because today’s training approaches do not consider how inferences might be processed differently at runtime.

Adaptive AI℠ is the Future

Adaptive AI offers a new way to run your neural network that dynamically minimizes its working footprint in both memory and compute. Depending on the runtime context, such as the quality of the input signal or the remaining battery life of your system, you can throttle the network up or down to hit a desired performance/power target.
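To make the idea of context-based throttling concrete, here is a minimal Python sketch of one possible policy. It assumes the network can run at a few discrete width levels (fractions of its channels) and maps two context signals, remaining battery fraction and input-signal quality, to one of them. The level set, the `select_width` function, and its weighting are hypothetical illustrations; the article does not describe how Adaptive AI actually chooses an operating point.

```python
# Hypothetical context-to-width policy for illustration; not Latent AI's controller.
from typing import Sequence

# Assumed discrete operating points: fraction of the network's channels to compute.
WIDTH_LEVELS = (0.25, 0.5, 0.75, 1.0)


def select_width(
    battery_fraction: float,
    signal_quality: float,
    levels: Sequence[float] = WIDTH_LEVELS,
) -> float:
    """Choose a width level from runtime context (both inputs in [0, 1]).

    A noisier input asks for more capacity; remaining battery caps what we
    are willing to spend. The smallest level is always allowed.
    """
    if not levels:
        raise ValueError("need at least one width level")
    if not (0.0 <= battery_fraction <= 1.0 and 0.0 <= signal_quality <= 1.0):
        raise ValueError("battery_fraction and signal_quality must be in [0, 1]")
    ordered = sorted(levels)
    # Demand (hypothetical weighting): a clean signal (quality 1.0) needs 25% of
    # the network, a very poor one (quality 0.0) needs all of it.
    demand = 1.0 - 0.75 * signal_quality
    # Budget: never exceed the battery fraction, but keep the smallest level.
    budget = max(ordered[0], battery_fraction)
    affordable = [w for w in ordered if w <= budget]
    # Smallest affordable level that meets demand, else the largest affordable one.
    for w in affordable:
        if w >= demand:
            return w
    return affordable[-1]
```

With a full battery, a clean input gets the smallest level (`select_width(1.0, 1.0)` returns 0.25) and a very noisy one gets the full network (`select_width(1.0, 0.0)` returns 1.0); at 60% battery with a middling signal, the battery cap wins and the policy settles for 0.5.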

Such a formulation requires retraining the network in a way that offers runtime flexibility during inference. Training therefore no longer targets a single static model; instead, it covers a larger design space that also includes runtime conditions.
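One published way to get this kind of runtime flexibility is the slimmable-network idea: a single set of weights that can be evaluated at several widths by computing only a prefix of each layer’s units. The pure-Python sketch below shows only the inference-side mechanics of that idea, and how compute scales with the chosen width. The class names, layer sizes, and random weights are assumptions for illustration; this is not Latent AI’s method, and it includes no training.

```python
# Minimal slimmable-style MLP: one set of weights, runnable at several widths.
# Weights are random; a real slimmable network trains all widths jointly.
import random
from typing import List


class SlimmableDense:
    """Dense layer that can compute only a prefix of its outputs."""

    def __init__(self, in_features: int, out_features: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.in_features = in_features
        self.out_features = out_features
        self.weight = [
            [rng.uniform(-1.0, 1.0) for _ in range(in_features)]
            for _ in range(out_features)
        ]
        self.bias = [0.0] * out_features

    def forward(self, x: List[float], n_out: int) -> List[float]:
        """Use the first len(x) inputs and compute the first n_out outputs."""
        if len(x) > self.in_features or not 1 <= n_out <= self.out_features:
            raise ValueError("slice exceeds layer size")
        return [
            sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(self.weight[:n_out], self.bias)
        ]


class SlimmableMLP:
    """Input -> slimmable hidden layer -> full-size classifier head."""

    def __init__(self, n_in: int = 16, n_hidden: int = 64, n_classes: int = 4) -> None:
        self.n_in, self.n_hidden, self.n_classes = n_in, n_hidden, n_classes
        self.hidden = SlimmableDense(n_in, n_hidden, seed=1)
        self.head = SlimmableDense(n_hidden, n_classes, seed=2)

    def active_hidden(self, width: float) -> int:
        # Number of hidden units computed at this width (at least one).
        return max(1, min(self.n_hidden, round(self.n_hidden * width)))

    def forward(self, x: List[float], width: float) -> List[float]:
        k = self.active_hidden(width)
        # ReLU is applied only to the k active hidden units.
        h = [max(0.0, v) for v in self.hidden.forward(x, k)]
        # The head reads only those k units but always emits every class score.
        return self.head.forward(h, self.n_classes)

    def active_macs(self, width: float) -> int:
        # Multiply-accumulates per input at this width.
        k = self.active_hidden(width)
        return self.n_in * k + k * self.n_classes
```

At width 0.25 this toy network performs 320 multiply-accumulates per input instead of 1,280 at full width. Running random weights at reduced width says nothing about accuracy: as noted above, slicing a conventionally trained network tends to wreck accuracy, which is why the widths have to be trained together.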

Adaptive AI networks are no more difficult to train than normal networks. We have shown results for a number of complex tasks, including object classification and detection. Other academic approaches, such as GaterNet and Slimmable Networks, have recently been published, but none has reported results on complex, high-dimensional video-processing problems. We offer a glimpse of our results below.

Adaptive AI means Agility

In our video-based gesture recognition example, the adaptive neural network starts at a low utilization level to save power. As new video data is processed, the network can throttle up the number of parameters it computes until it reaches the desired confidence threshold. This control is handled automatically by the neural network itself. Once the inference is complete, the system throttles back down to a lower level of compute.
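The throttle-up, throttle-down behavior described above can be sketched as a small state machine. The article says the network controls this itself and does not disclose the mechanism, so the version below is a hypothetical external controller: it assumes discrete width levels, treats "confidence" as a single score per frame (for example, the top class probability), and uses a toy stand-in `confidence_at` function in place of a real gesture model.

```python
# Hypothetical confidence-driven throttle controller (illustration only).
from typing import Callable, List, Sequence, Tuple

LEVELS = (0.25, 0.5, 0.75, 1.0)  # assumed width levels, low to high


class ThrottleController:
    """Raise the width one level per frame until confidence clears the threshold."""

    def __init__(self, levels: Sequence[float] = LEVELS, threshold: float = 0.9) -> None:
        if not levels:
            raise ValueError("need at least one level")
        self.levels = sorted(levels)
        self.threshold = threshold
        self.idx = 0  # start at the lowest utilization level to save power

    @property
    def width(self) -> float:
        return self.levels[self.idx]

    def update(self, confidence: float) -> bool:
        """Feed one frame's confidence; return True when a decision is made."""
        if confidence >= self.threshold:
            self.idx = 0  # inference done: throttle back down
            return True
        # Not confident yet: compute more of the network on the next frame.
        self.idx = min(self.idx + 1, len(self.levels) - 1)
        return False


def run_stream(
    confidence_at: Callable[[int, float], float],
    n_frames: int,
    ctrl: ThrottleController,
) -> List[Tuple[float, bool]]:
    """Return (width used, decision reached) for each frame."""
    trace = []
    for frame in range(n_frames):
        width = ctrl.width
        trace.append((width, ctrl.update(confidence_at(frame, width))))
    return trace
```

With a toy model whose confidence is simply `0.95 * width`, `run_stream(lambda f, w: 0.95 * w, 6, ThrottleController())` climbs through widths 0.25, 0.5, 0.75 and 1.0, reaches a decision on the fourth frame, and then drops back to 0.25 for the next gesture. Stepping up one level per frame keeps each frame cheap; a real system might jump levels faster when latency matters more than power.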

Our results show that peak accuracy is comparable to that of equivalent vanilla (non-adaptive) architectures. With Adaptive AI, the system is highly agile and can self-regulate to minimize its computational needs.